Understanding Immigration Discourse on Reddit

A Multi-Label Frame Classification Study Using Fine-Tuned BERT

Introduction & Motivation

Why Immigration Discourse Matters

Public immigration discourse has long mirrored broader societal tensions. Across modern nations, immigration often functions as a proxy debate that stands in for concerns about economic insecurity, national identity, and globalization. In the United States, this discussion is further magnified by an evolving multimedia ecosystem in which online platforms both reflect and reshape public opinion.

Social media, in particular, has amplified how citizens frame immigration—whether they express positive or negative sentiments, and how they define immigrants as economic contributors, cultural outsiders, or humanitarian subjects. These frames influence real-world policy outcomes, from visa allocations to refugee programs, and they directly affect the well-being of immigrant communities.

Why Reddit?

While platforms such as X (formerly Twitter) and Facebook have been extensively studied for their influence on immigration narratives, Reddit remains comparatively underexamined. Its unique characteristics make it an ideal research environment:

- Semi-Anonymous Structure: Users can express views more freely without personal identity exposure

- Community-Driven Moderation: Each subreddit develops its own norms and rules

- Upvote/Downvote System: Community consensus emerges through voting mechanisms

- Network of Subcommunities: Reddit operates as interconnected spaces rather than a single public square

Research Questions

Our Approach: We move beyond traditional sentiment analysis (positive/negative) to trace how Reddit users define the problems, causes, and moral narratives surrounding immigration during periods of upheaval.

Major Events We Examine

2016 Presidential Election

Trump's victory and the rise of "build the wall" rhetoric fundamentally shifted immigration discourse

2020 George Floyd Protests

Racial justice movements intersected with immigration debates around enforcement and discrimination

COVID-19 Pandemic

Public health concerns merged with immigration policy, border closures, and xenophobia

2022 Midterm Elections

Republicans regained House control with immigration as a central campaign issue

Research Objectives

Contributions to the Field

Our project demonstrates how AI techniques can extend traditional communication research. By leveraging generative AI for frame classification and narrative synthesis, we provide:

- Methodological Innovation: First large-scale computational frame analysis of Reddit immigration discourse

- Theoretical Advancement: Validation that political discourse is multi-dimensional (avg 1.41 frames per post)

- Practical Tools: Scalable methods for monitoring public discourse and democratic resilience

- Data Requirements: Empirical thresholds for machine learning frame detection (~50-70 examples minimum)

Project Overview: By the Numbers

Methodology: A Multi-Stage Approach

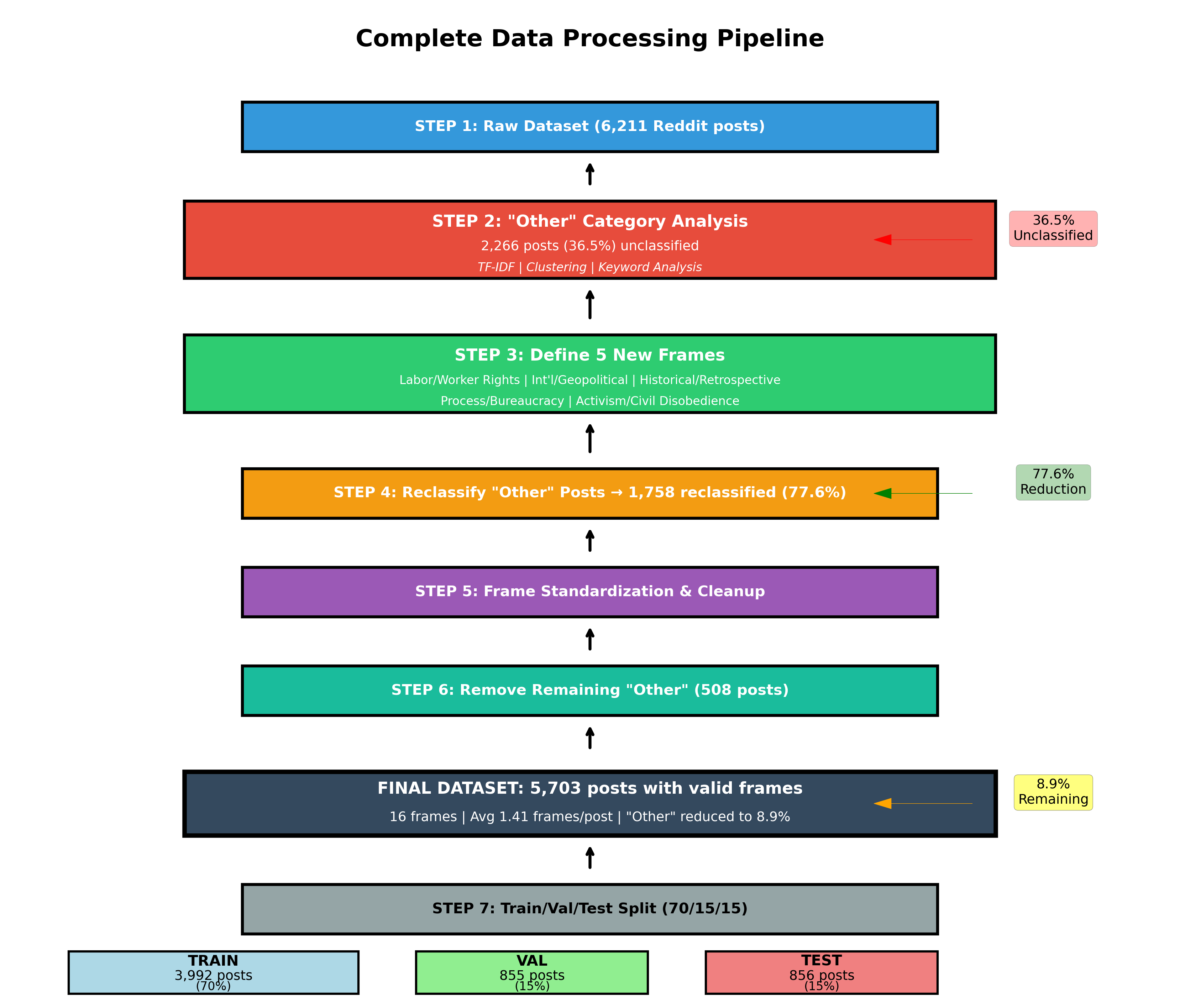

Stage 1: Data Collection & Initial Classification

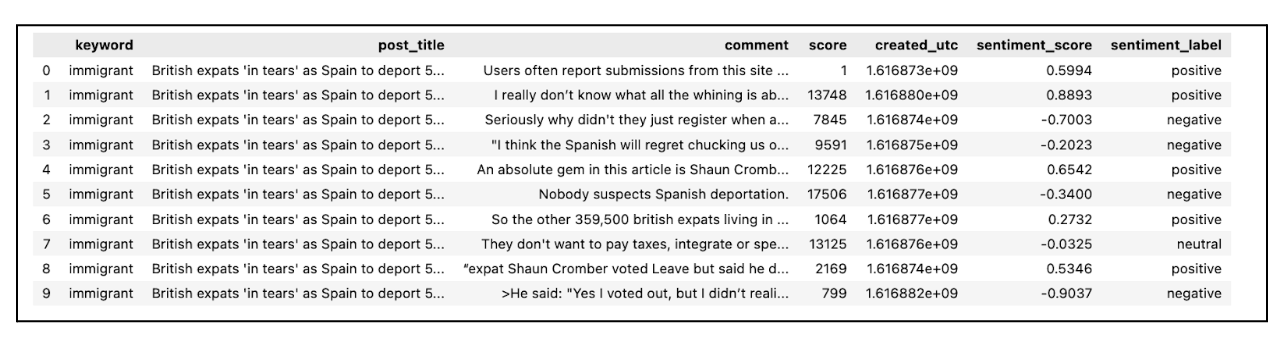

Using the Python Reddit API Wrapper (PRAW), we collected posts and comments from subreddits including r/worldnews, r/politics, and r/immigration between 2015 and 2024. We filtered content using keywords such as "immigrant," "migration," "refugee," "asylum," and "border." Each record in the resulting dataset contains the following fields:

- post_title - title of the Reddit post

- comment - Reddit user comment or response

- score - Reddit upvote score (proxy for engagement)

- created_utc - timestamp of comment creation (Unix time)

- sentiment_score - VADER-generated sentiment polarity score

- sentiment_label - categorized sentiment (positive, neutral, or negative)
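The keyword filter and the sentiment_label field can be sketched as below. This is a minimal illustration under stated assumptions, not the study's code. The keyword tuple holds only the examples named above, and case-insensitive substring matching is an assumed design choice. The ±0.05 cut-offs are the thresholds recommended in the VADER documentation, not values reported here. In a full pipeline the compound score would come from VADER's `SentimentIntensityAnalyzer().polarity_scores(text)["compound"]`.

```python
# Example keywords named in the text; the study's full list may be longer.
IMMIGRATION_KEYWORDS = ("immigrant", "migration", "refugee", "asylum", "border")


def is_immigration_related(text: str) -> bool:
    """Case-insensitive substring match (an assumed design choice).

    Substrings let "immigration" match "migration" and "refugees" match
    "refugee", at the cost of false hits such as "borderline".
    """
    lowered = text.lower()
    return any(keyword in lowered for keyword in IMMIGRATION_KEYWORDS)


def vader_label(compound: float, threshold: float = 0.05) -> str:
    """Map a VADER compound score in [-1, 1] to a sentiment_label.

    The +/-0.05 threshold follows the VADER documentation (assumed here).
    """
    if compound >= threshold:
        return "positive"
    if compound <= -threshold:
        return "negative"
    return "neutral"


print(is_immigration_related("New immigration bill passes the Senate"))  # True
print(vader_label(-0.42))  # negative
```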

After inspecting the raw data, we built a clean, well-structured text dataset while preserving all original information. First, we combined the ‘post_title’ and ‘comment’ columns into a new ‘text’ field so that both sources contribute context to each record. We then cleaned this field by removing URLs, markdown links, hashtags, mentions, and special characters, converting it to lowercase, and trimming excess whitespace. The cleaned output was stored in a new ‘clean_text’ column, leaving the original columns unchanged for reference.

Next, we standardized column names by converting them to lowercase and replacing spaces with underscores. We also removed duplicate comments and dropped rows missing either sentiment field (‘sentiment_score’ or ‘sentiment_label’). Finally, we converted ‘created_utc’ from a Unix timestamp to a readable datetime and sorted the data from oldest to newest. The resulting dataframe retains key attributes such as sentiment scores, frame labels, and timestamps, while providing a cleaned version of the text for downstream NLP and framing analysis.
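The preparation steps above can be sketched with plain Python dictionaries, which keeps the example dependency-free. In pandas, the same steps map onto `drop_duplicates`, `dropna`, `pd.to_datetime(..., unit="s")`, and `sort_values`. The regular expressions below are assumptions, since the study does not list its exact cleaning rules. Keeping the visible label of a markdown link is also an assumed choice.

```python
import re
from datetime import datetime, timezone

# Assumed cleaning rules; the study's exact regular expressions are not given.
MARKDOWN_LINK = re.compile(r"\[([^\]]*)\]\([^)]*\)")  # [label](target)
URL = re.compile(r"https?://\S+|www\.\S+")
HASHTAG_OR_MENTION = re.compile(r"(?:[#@]|\bu/)\w+")  # #tag, @user, u/user
SPECIAL_CHARS = re.compile(r"[^a-z0-9\s]")
WHITESPACE = re.compile(r"\s+")


def clean_text(raw: str) -> str:
    """Strip links, hashtags, mentions, and special characters; lowercase."""
    text = MARKDOWN_LINK.sub(r"\1", raw)  # keep the visible label only
    text = URL.sub(" ", text)
    text = HASHTAG_OR_MENTION.sub(" ", text)
    text = SPECIAL_CHARS.sub(" ", text.lower())
    return WHITESPACE.sub(" ", text).strip()


def prepare_records(rows: list) -> list:
    """Apply the preparation steps to a list of raw row dicts."""
    seen_comments = set()
    prepared = []
    for row in rows:
        # Standardize column names: lowercase, spaces -> underscores.
        rec = {k.strip().lower().replace(" ", "_"): v for k, v in row.items()}
        # Drop rows missing either sentiment field.
        if rec.get("sentiment_score") is None or rec.get("sentiment_label") is None:
            continue
        # Drop duplicate comments, keeping the first occurrence.
        comment = rec.get("comment") or ""
        if comment in seen_comments:
            continue
        seen_comments.add(comment)
        # Combine title and comment; post_title and comment stay unchanged.
        rec["text"] = f"{rec.get('post_title') or ''} {comment}".strip()
        rec["clean_text"] = clean_text(rec["text"])
        # Unix timestamp -> timezone-aware datetime.
        rec["created_utc"] = datetime.fromtimestamp(rec["created_utc"], tz=timezone.utc)
        prepared.append(rec)
    # Oldest to newest.
    return sorted(prepared, key=lambda r: r["created_utc"])


print(clean_text("Check [this](https://x.com) out! #Border @user"))  # check this out
```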

Framing Development

Framing Theory and Traditional Media Foundations

Framing theory provides the conceptual foundation for analyzing political language. Entman's (1993) framework defines frames as selective interpretations that highlight particular problem definitions, causal attributions, moral evaluations, and policy solutions. Building on this foundation, Boydstun et al. (2014) demonstrated how media outlets emphasize different policy frames across issues, showing that the structure and dynamics of frames shift over time. Computational expansions of this work, such as Hartmann et al.’s (2021) cross-national analysis of Russian news, highlight how automated text analysis can reveal agenda-setting patterns at scale. However, these studies center on traditional media and elite discourse, offering limited insight into bottom-up political communication or the participatory framing that emerges in online communities.

Social Media Framing and Immigration Discourse

Recent scholarship has extended framing analysis to social media environments, revealing distinct patterns in user-to-user interactions during crisis periods. Mendelsohn et al. (2021) show that immigration debates online contain platform-specific frames (such as crime, refugees, and family separation) that diverge from traditional media patterns. Studies conducted during COVID-19 further illustrate how crises reshape immigration attitudes: Rowe et al. (2021) document early-pandemic sentiment shifts across countries, while Muis and Reeskens (2022) observe heightened exclusionary attitudes in Europe during the same period. These studies marked an important shift from analyzing media texts to examining public discourse on platforms where users engage directly with political issues in real-time conversations (Mazzoleni & Bracciale, 2019), though they focused primarily on Twitter's "digital town square" model rather than community-driven platforms.

Reddit’s Community Structure and Political Discourse

Although smaller in scale, research on Reddit emphasizes its distinct platform norms and demographic composition, with its user base skewing young, male, and disproportionately liberal (Pew Research Center, 2016). The subreddit structure fosters ideologically homogeneous communities and facilitates recursive interaction, making Reddit a unique site for studying political discourse and polarization (Huang et al., 2024). Milner (2020) analyzed how Reddit's structure normalizes immigrant exclusion within dominant discourse, while Manikonda et al. (2022) tracked shifts in user attitudes about anti-Asian hate on Reddit before and during COVID-19, demonstrating the platform's responsiveness to external shocks. Further studies examine emotional incivility in immigrant discussions (Milhazes-Cunha & Oliveira, 2025), right-wing extremism and hostility (Peckford, 2023), and linguistic code-mixing in migration conversations (Vitiugin et al., 2024). However, these Reddit-focused studies predominantly employed sentiment analysis rather than systematic framing classification, and none combined frame analysis with sentiment tracking across multiple temporal shocks or employed generative AI for scalable frame detection.

Gap Identification

Despite these advances, significant gaps remain in our understanding of immigration framing dynamics on Reddit. Prior studies either analyze traditional media texts and policy documents (Entman, 1993; Boydstun et al., 2014; Hartmann et al., 2021) or examine social media sentiment without systematic framing analysis (Rowe et al., 2021; Muis & Reeskens, 2022; Manikonda et al., 2022). The few studies that do analyze Reddit discourse (Milner, 2020; Peckford, 2023; Vitiugin et al., 2024) focus on single time periods or specific communities rather than on longitudinal frame evolution across multiple external shocks. Furthermore, existing research has not combined frame classification with sentiment tracking to measure their joint evolution, nor has it leveraged generative AI for scalable frame detection while maintaining theoretical grounding in established framing taxonomies. We address these gaps by applying a twelve-category framing schema (humanitarian, economic, security, cultural, policy/legal, health, political/partisan, racial/ethnic, environmental, education, tech/surveillance, and international) to Reddit's community-driven ecosystem. We examine how immigration discourse shifts within and across ideologically distinct subreddits during five major events (the 2016 and 2020 elections, COVID-19, the George Floyd protests, and the 2022 midterms) from 2015 to 2024. Using a hybrid methodology (manual annotation of 150–200 posts, followed by supervised learning baselines and few-shot LLM classification), we move beyond traditional methods and test whether Reddit's historically liberal user base exhibits increased conservative or exclusionary framing over time.

Initial Frame Taxonomy (13 Frames)

| Frame Category | Description | Example Keywords |
|---|---|---|
| Humanitarian | Refugee protection, human rights, compassion | refugee, asylum, crisis, fleeing |
| Economic | GDP effects, fiscal costs, economic impacts | economy, jobs, wages, tax |
| Security | Border security, terrorism, crime | border, illegal, enforcement, crime |
| Cultural | Cultural integration, assimilation, identity | culture, assimilate, tradition |
| Policy/Legal | Immigration law, visa policy, procedures | visa, law, legal, policy |

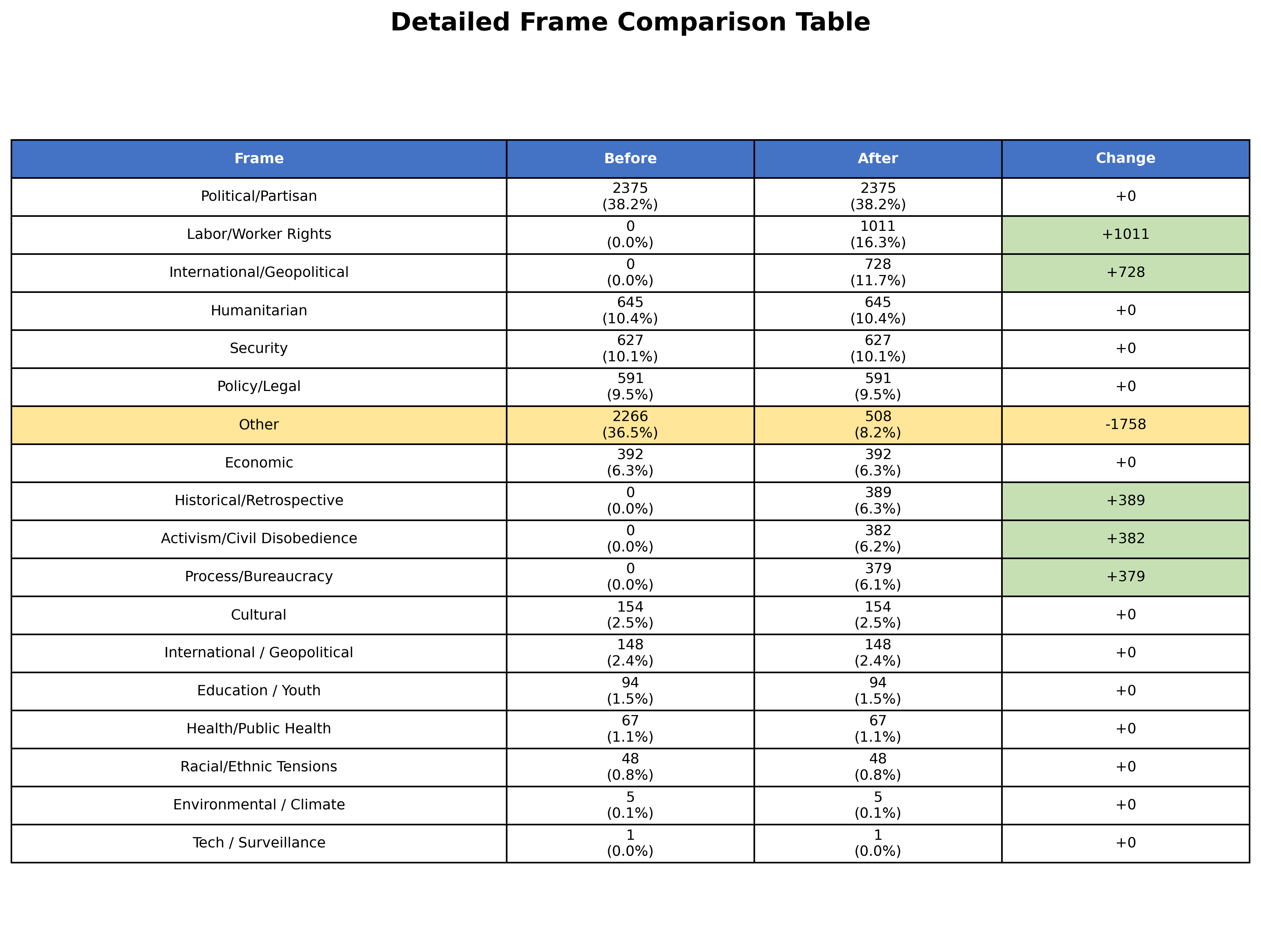

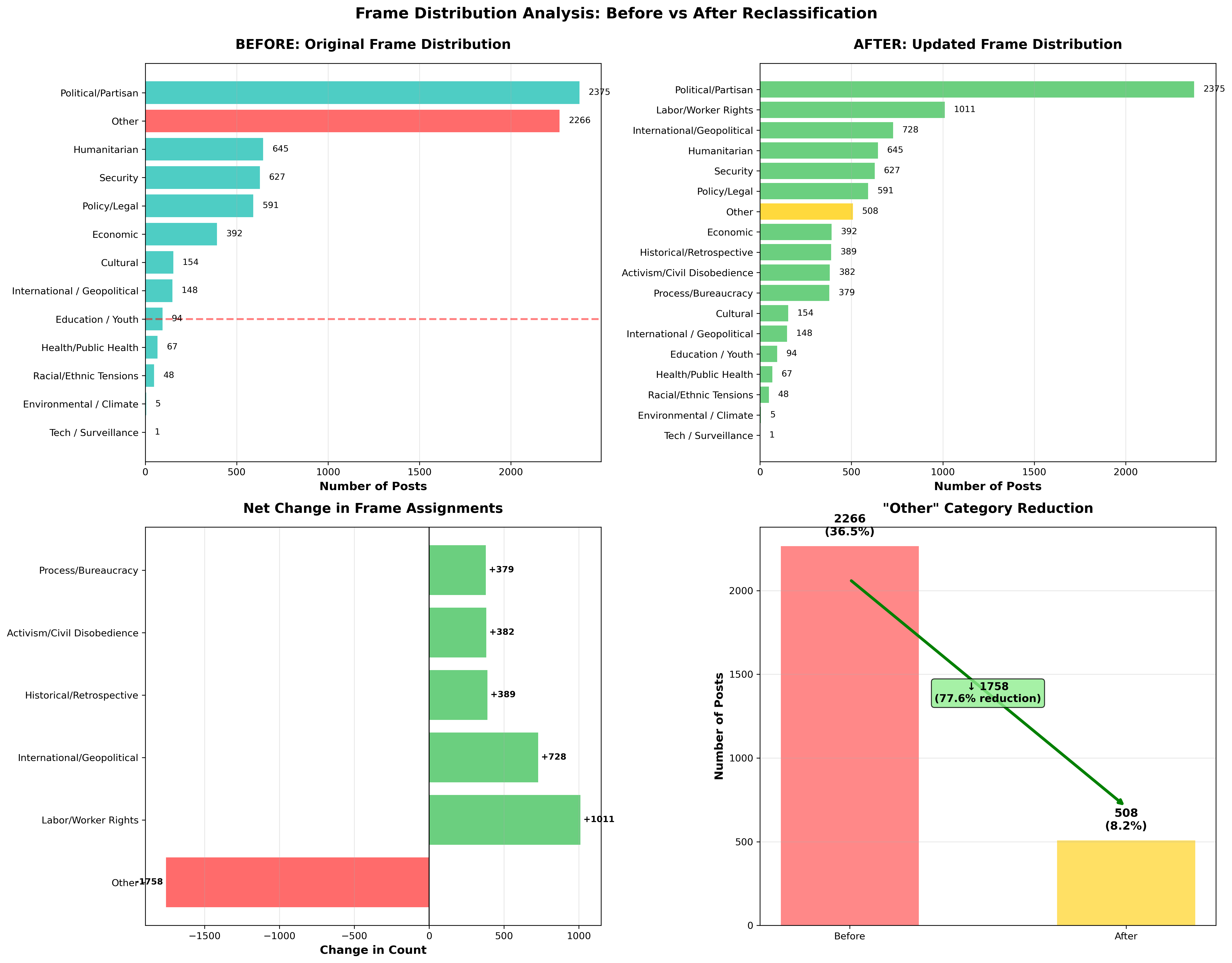

The "Other" Category Problem

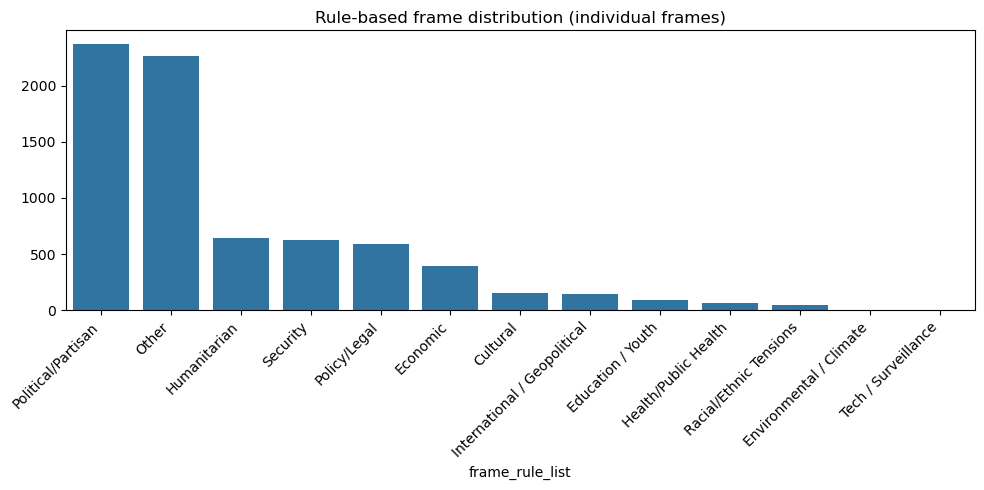

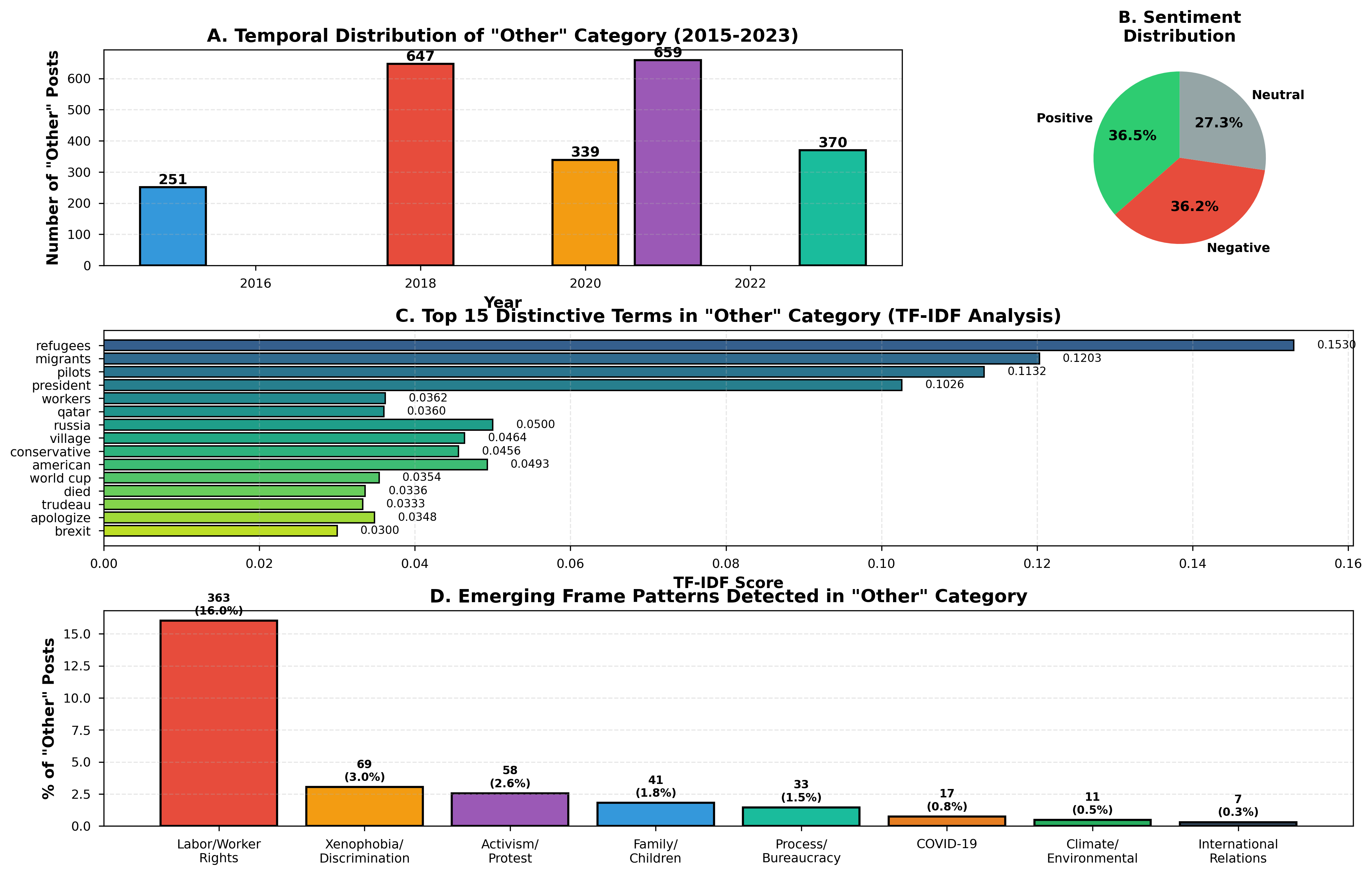

Our initial rule-based classification revealed a critical issue: 36.5% of posts (2,266 of 6,211) were categorized as "Other." Rather than accepting this limitation, we conducted systematic computational analysis to uncover hidden patterns.
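A rule-based keyword classifier with an "Other" fallback can be sketched as follows. This is an illustrative sketch, not the study's implementation. The dictionaries are abbreviated and hypothetical, built from the example keywords in the initial taxonomy table. Anchoring keywords at word starts is an assumed choice, made so that "legal" does not fire inside "illegal". Every matching frame is kept, so a post can be multi-label.

```python
import re

# Abbreviated, hypothetical dictionaries built from the example keywords in the
# initial taxonomy table; the study's full dictionaries are not reproduced here.
FRAME_KEYWORDS = {
    "Humanitarian": ["refugee", "asylum", "crisis", "fleeing"],
    "Economic": ["economy", "jobs", "wages", "tax"],
    "Security": ["border", "illegal", "enforcement", "crime"],
    "Cultural": ["culture", "assimilate", "tradition"],
    "Policy/Legal": ["visa", "law", "legal", "policy"],
}


def compile_patterns(frame_keywords):
    """One regex per frame; each keyword must start at a word boundary."""
    return {
        frame: re.compile(r"\b(?:" + "|".join(map(re.escape, words)) + ")")
        for frame, words in frame_keywords.items()
    }


PATTERNS = compile_patterns(FRAME_KEYWORDS)


def classify(text, patterns=PATTERNS):
    """Return every matching frame (multi-label), or ["Other"] if none match."""
    lowered = text.lower()
    frames = [frame for frame, pattern in patterns.items() if pattern.search(lowered)]
    return frames or ["Other"]


def other_share(texts, patterns=PATTERNS):
    """Fraction of texts that fall through to the "Other" category."""
    labels = [classify(t, patterns) for t in texts]
    return sum(label == ["Other"] for label in labels) / len(labels)


posts = [
    "Refugees fleeing crime at home",
    "The visa backlog keeps growing",
    "Migrant workers died building World Cup stadiums",
]
print([classify(p) for p in posts])
# [['Humanitarian', 'Security'], ['Policy/Legal'], ['Other']]
```

With the study's reported counts, the same ratio is 2,266 / 6,211 ≈ 0.365. The third example shows how labor-exploitation posts can fall through to "Other" when no dictionary covers them.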

Diagnostic Analysis Methods

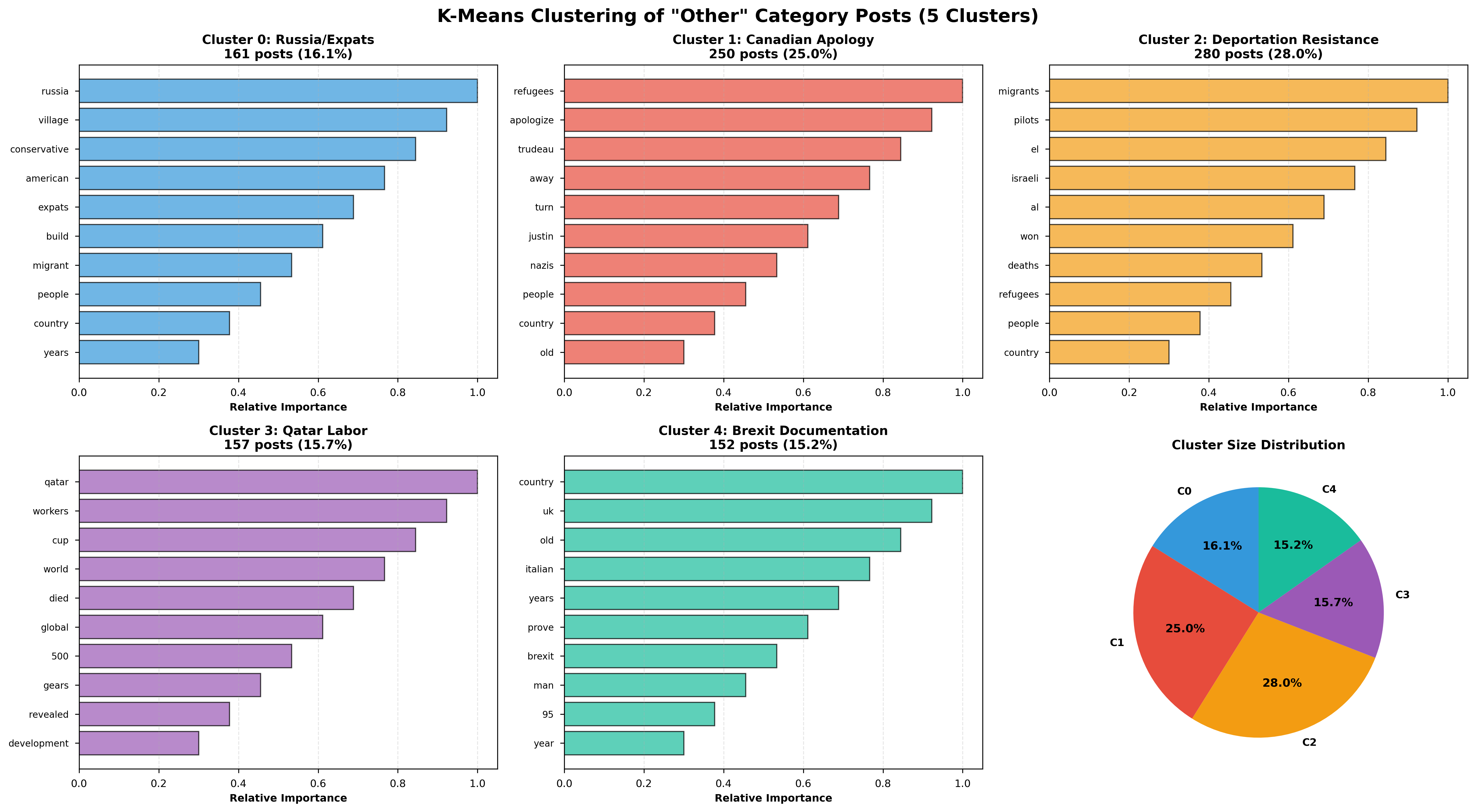

Key Discoveries from "Other" Analysis

- Labor Exploitation: 16% of "Other" posts discussed migrant worker deaths (Qatar World Cup) and H-1B issues

- Geopolitical Discourse: Russia building "migrant villages" for American expats

- Historical Comparisons: Canadian apology for refusing Jewish refugees in 1939

- Bureaucratic Challenges: Brexit residency documentation issues

- Activism: Israeli pilots refusing to deport migrants, sanctuary city movements

New Frame Development

Based on our analysis, we developed 5 new frames with explicit keyword dictionaries, reducing the "Other" category by 77.6% (from 2,266 to 508 posts).

| New Frame | Posts Captured | Key Topics | Example Keywords |
|---|---|---|---|
| Labor/Worker Rights | 1,011 | Qatar World Cup deaths, H-1B exploitation, workplace safety | worker, exploitation, wage, died, Qatar |
| International/Geopolitical | 728 | Russia-US relations, diplomatic issues, expat communities | Russia, bilateral, foreign policy, expats |
| Historical/Retrospective | 389 | Nazi comparisons, 1939 refugee refusal, lessons from history | apologize, Nazi, 1939, turned away, historical |
| Process/Bureaucracy | 379 | Brexit documentation, visa processing, paperwork challenges | paperwork, prove, application, residency, delay |
| Activism/Civil Disobedience | 382 | Pilots refusing deportations, sanctuary cities, protests | refuse, pilots refuse, won't deport, sanctuary |
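Re-labeling "Other" posts with the new dictionaries, and computing the reported reduction, can be sketched as below. The dictionaries are hypothetical abbreviations of the example keywords in the table, and multi-word phrases such as "won't deport" are matched literally. This is a sketch of the idea, not the study's code. The reduction is the relative drop (before − after) / before.

```python
import re

# Abbreviated, hypothetical dictionaries for the five new frames, taken from the
# example keywords in the table; the study's complete dictionaries are not shown.
NEW_FRAME_KEYWORDS = {
    "Labor/Worker Rights": ["worker", "exploitation", "wage", "died", "qatar"],
    "International/Geopolitical": ["russia", "bilateral", "foreign policy", "expats"],
    "Historical/Retrospective": ["apologize", "nazi", "1939", "turned away", "historical"],
    "Process/Bureaucracy": ["paperwork", "prove", "application", "residency", "delay"],
    "Activism/Civil Disobedience": ["refuse", "pilots refuse", "won't deport", "sanctuary"],
}
PATTERNS = {
    frame: re.compile(r"\b(?:" + "|".join(map(re.escape, words)) + r")")
    for frame, words in NEW_FRAME_KEYWORDS.items()
}


def relabel_other(text):
    """Assign new frames to a post previously labeled "Other"."""
    lowered = text.lower()
    frames = [frame for frame, pattern in PATTERNS.items() if pattern.search(lowered)]
    return frames or ["Other"]


def percent_reduction(before, after):
    """Relative reduction of the "Other" category, in percent."""
    return 100 * (before - after) / before


print(relabel_other("Canada to apologize for turning away Jewish refugees in 1939"))
# ['Historical/Retrospective']
print(round(percent_reduction(2266, 508), 1))  # 77.6
```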

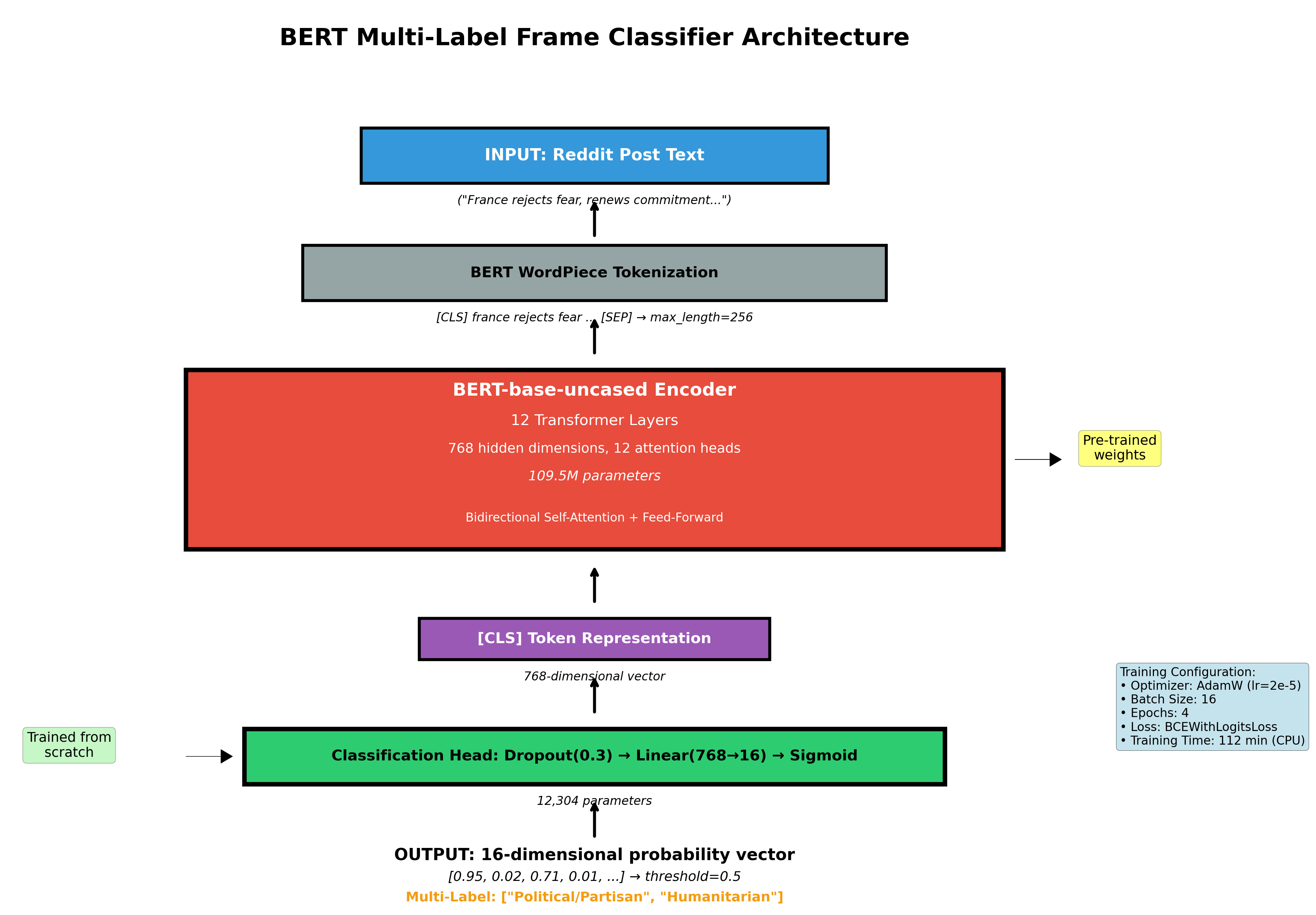

BERT Model Development

With our refined 16-frame taxonomy, we developed a multi-label classification system based on BERT (Bidirectional Encoder Representations from Transformers), in which each post can carry several frames at once.

Model Architecture

| Component | Specification | Purpose |
|---|---|---|
| Pre-trained Model | BERT-base-uncased (110M parameters) | Captures contextual language understanding |
| Encoder | 12 transformer layers, 768 hidden dimensions | Bidirectional attention for nuanced framing |
| Classification Head | Dropout (0.3) → Linear (768→16) → Sigmoid | Multi-label prediction per frame |
| Training | 4 epochs, 112 minutes on CPU | Full model fine-tuning |
| Optimizer | AdamW (lr=2e-5) with linear warmup | Stable convergence |

Training Strategy

We employed full model fine-tuning, updating all 110M BERT parameters plus roughly 12.3K classification-head parameters (768 × 16 weights and 16 biases). This allows the model to adapt its internal representations specifically for frame detection rather than relying solely on pre-trained knowledge.
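The multi-label head in the architecture table can be illustrated without a deep-learning framework. The sketch shows its parameter count, the independent per-frame sigmoid decoding, and the binary cross-entropy loss it is typically trained with. In PyTorch this would roughly correspond to `nn.Sequential(nn.Dropout(0.3), nn.Linear(768, 16))` trained with `nn.BCEWithLogitsLoss`, with `torch.sigmoid` applied only at inference. The 0.5 decision threshold is an assumption, since the text does not state one.

```python
import math

FRAME_COUNT = 16   # frames in the refined taxonomy
HIDDEN_SIZE = 768  # BERT-base hidden size


def head_parameter_count(hidden=HIDDEN_SIZE, labels=FRAME_COUNT):
    """Weights plus biases of the Linear(hidden -> labels) layer."""
    return hidden * labels + labels


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def decode(logits, frames, threshold=0.5):
    """Independent sigmoid per frame; keep every frame at or above the threshold."""
    return [f for f, z in zip(frames, logits) if sigmoid(z) >= threshold]


def bce_with_logits(logits, targets):
    """Mean binary cross-entropy in the stable logit form
    max(z, 0) - z*y + log(1 + exp(-|z|))."""
    losses = [max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))
              for z, y in zip(logits, targets)]
    return sum(losses) / len(losses)


print(head_parameter_count())  # 12304
print(decode([2.0, -1.0, 0.0], ["Security", "Economic", "Humanitarian"]))
# ['Security', 'Humanitarian']
```

Because each frame gets its own sigmoid rather than sharing a softmax, probabilities do not compete, which is what allows a post to receive several frames.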

Baseline Comparisons

To rigorously evaluate our approach, we implemented three baseline methods:

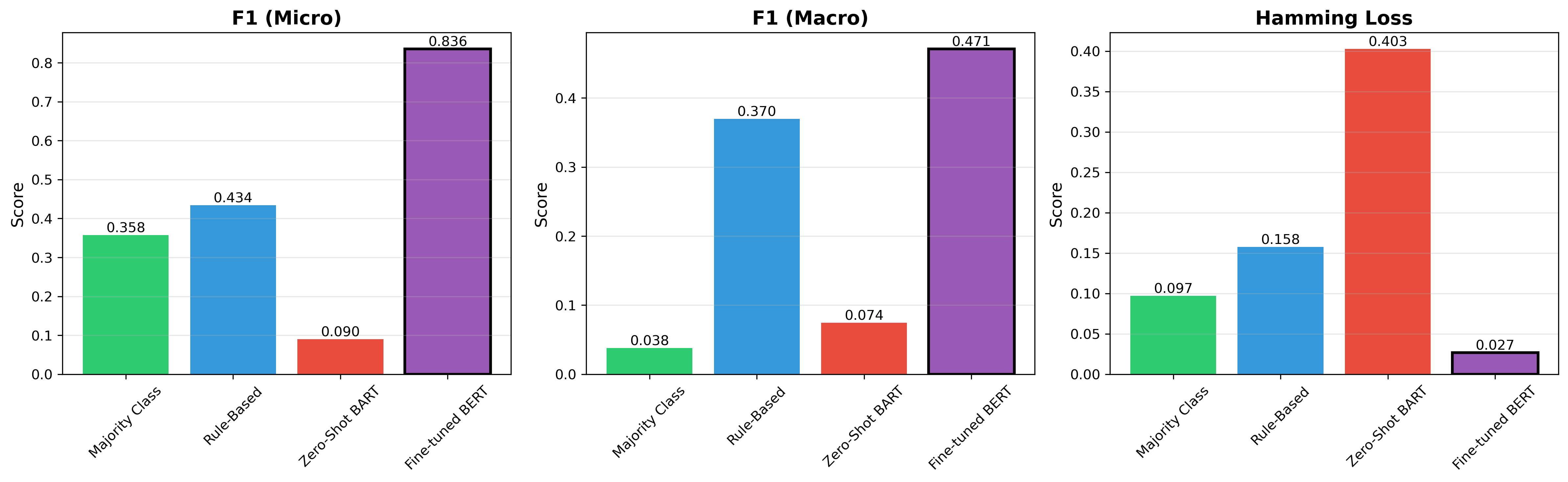

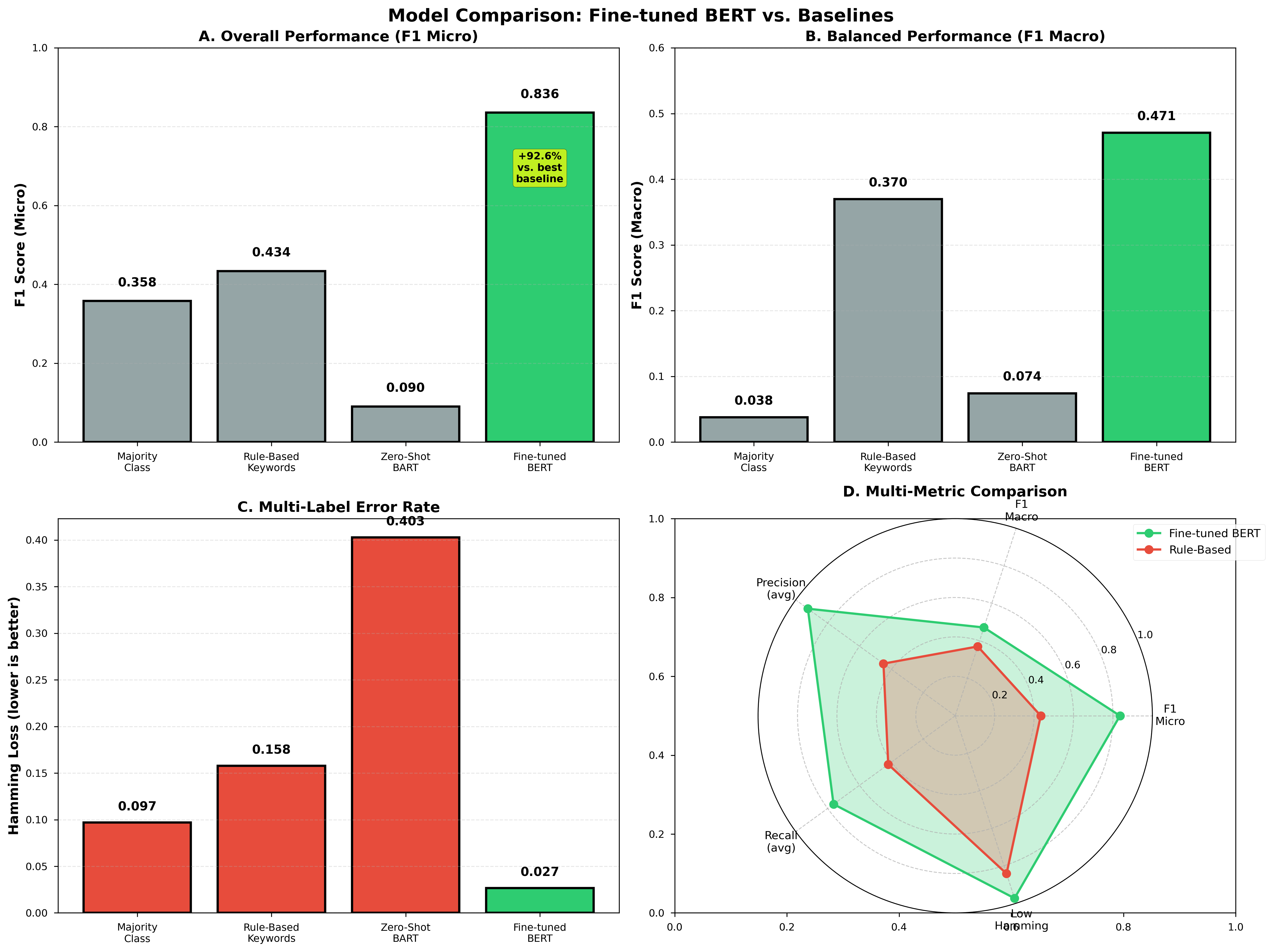

Reported F1 scores: 35.8%, 43.4%, and 9.0% for the three baselines, compared with 83.6% for fine-tuned BERT.

Results: Validating Our Approach

Overall Performance

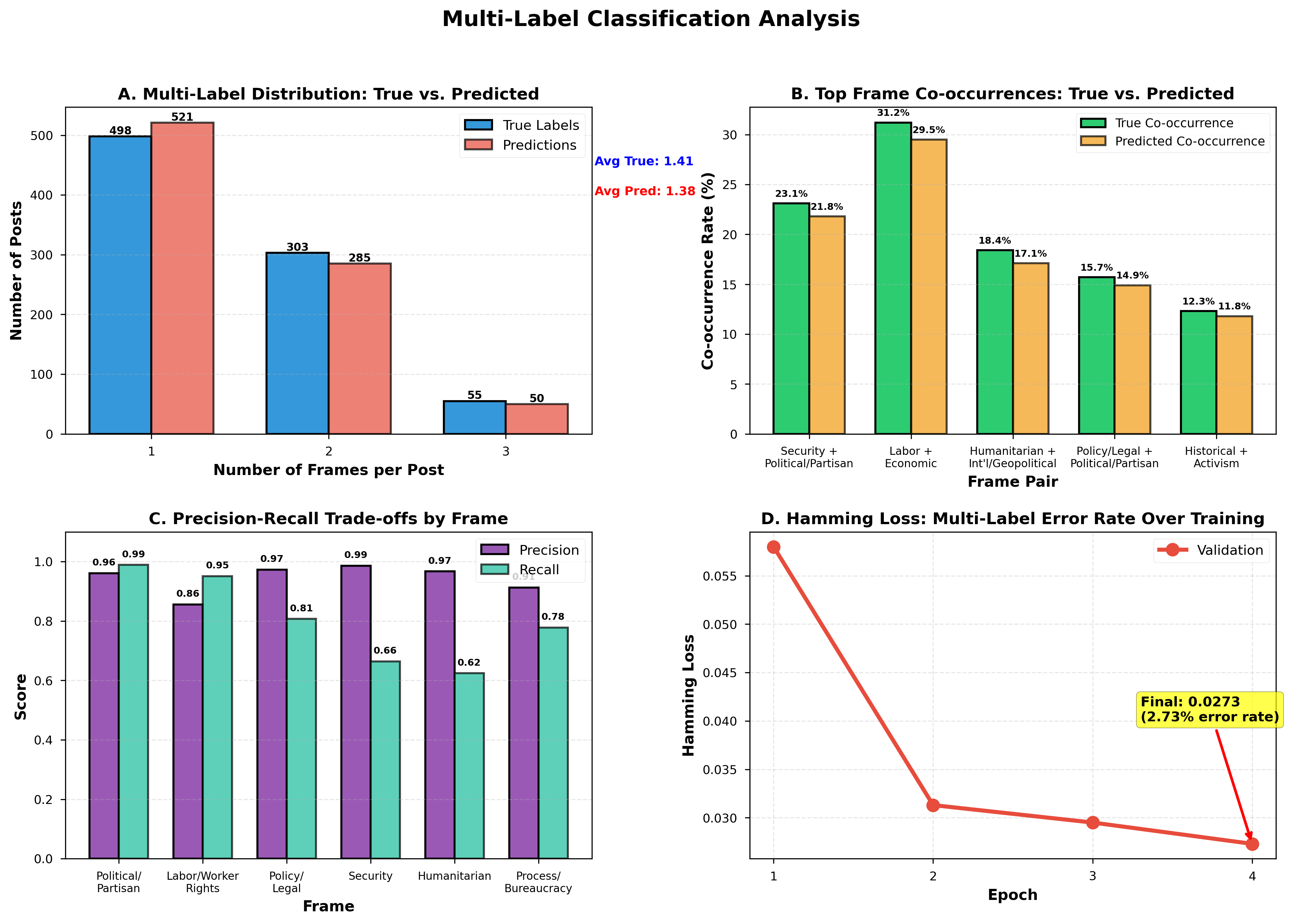

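Our BERT model achieves a micro-averaged F1 of 83.6%. The Hamming loss of 2.66% means that the model makes an incorrect frame decision (either a false positive or a false negative) in only 2.66 of every 100 post-frame pairs. Because most post-frame pairs are negative (about 1.41 of 16 frames per post), even a model that predicted no frames at all would have a Hamming loss of roughly 8.8%, so this figure is best read alongside F1.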
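Both headline metrics can be computed directly from per-post frame sets, as in the sketch below. The toy labels are illustrative, not study data. The last line shows why a low Hamming loss is easy to reach when labels are sparse: an all-negative predictor is wrong only on the true frames. Library equivalents exist as `sklearn.metrics.hamming_loss` and `sklearn.metrics.f1_score(..., average="micro")`, which expect binary indicator matrices rather than sets.

```python
def hamming_loss(y_true, y_pred, n_labels):
    """Share of (post, frame) decisions that are wrong, FP or FN alike."""
    errors = sum(len(t ^ p) for t, p in zip(y_true, y_pred))
    return errors / (len(y_true) * n_labels)


def micro_f1(y_true, y_pred):
    """F1 over true positives, false positives, and false negatives pooled across frames."""
    tp = sum(len(t & p) for t, p in zip(y_true, y_pred))
    fp = sum(len(p - t) for t, p in zip(y_true, y_pred))
    fn = sum(len(t - p) for t, p in zip(y_true, y_pred))
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


y_true = [{"Security", "Political/Partisan"}, {"Economic"}]
y_pred = [{"Security"}, {"Economic", "Labor/Worker Rights"}]
print(hamming_loss(y_true, y_pred, n_labels=16))  # 0.0625
print(round(micro_f1(y_true, y_pred), 3))  # 0.667
# A model that predicts no frames at all is wrong only on the true frames:
print(hamming_loss(y_true, [set(), set()], n_labels=16))  # 0.09375
```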

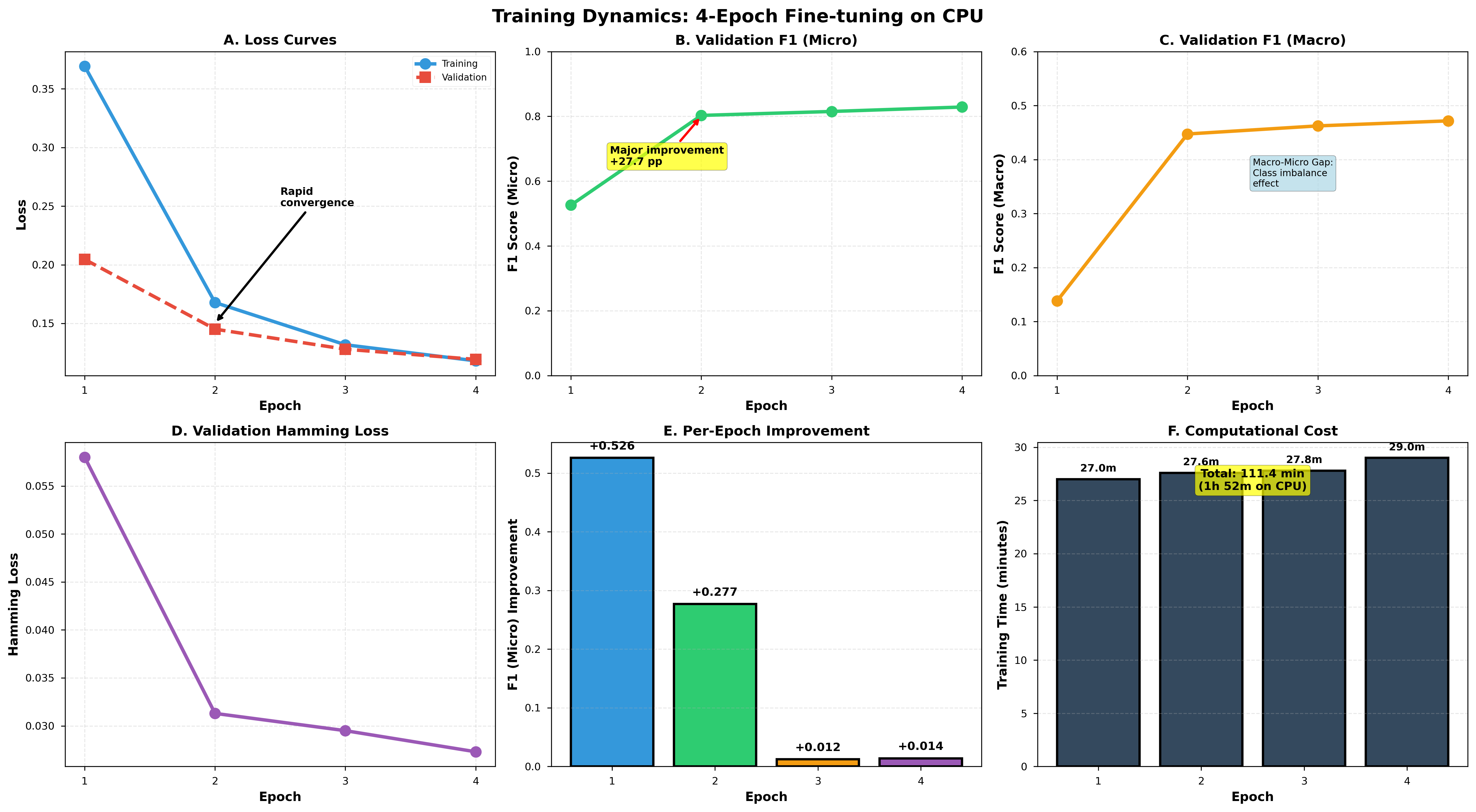

Training Dynamics: A Story of Convergence

| Epoch | Train Loss | Val Loss | Val F1 (Micro) | Val F1 (Macro) | Time (min) |
|---|---|---|---|---|---|
| 1 | 0.3693 | 0.2046 | 52.6% | 13.8% | 27.0 |
| 2 | 0.1678 | 0.1451 | 80.3% ↑27.7pp | 44.7% | 27.6 |
| 3 | 0.1318 | 0.1280 | 81.5% | 46.2% | 27.8 |
| 4 | 0.1181 | 0.1193 | 82.9% | 47.2% | 29.0 |

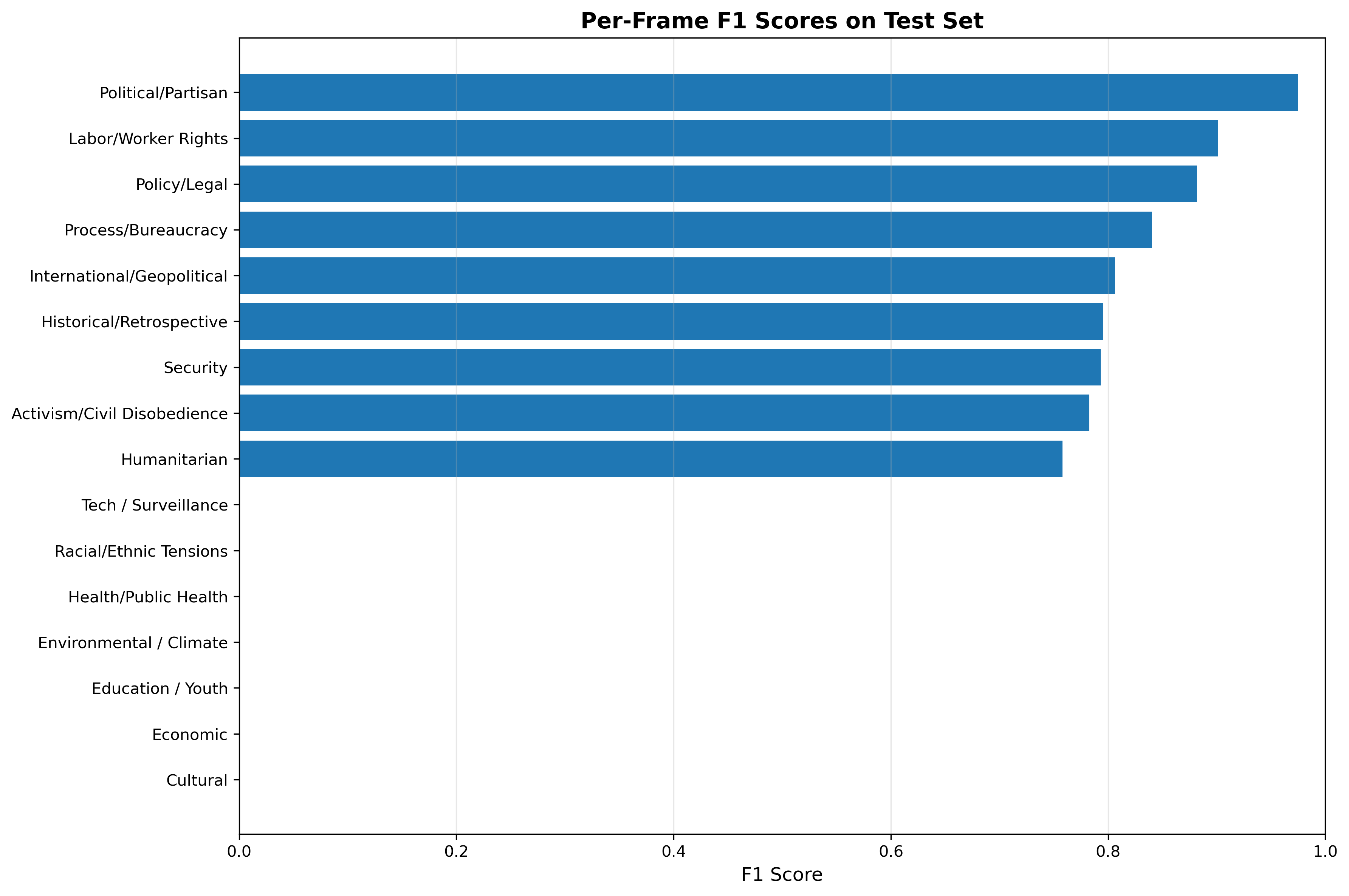

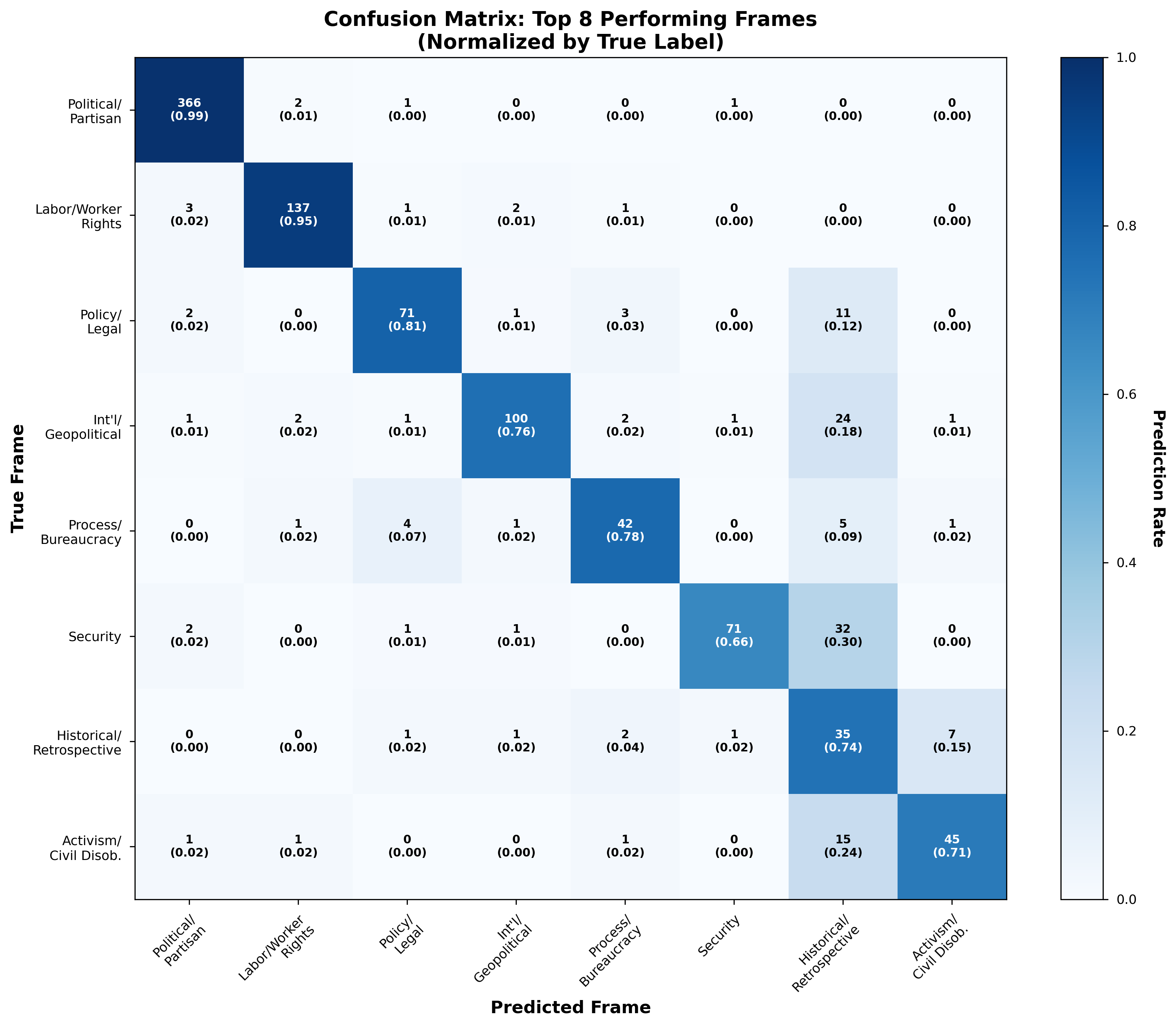

Per-Frame Performance: Success Stories and Challenges

Top Performers (F1 > 0.80)

| Frame | Precision | Recall | F1 Score | Support |
|---|---|---|---|---|
| Political/Partisan | 96.1% | 98.9% | 97.5% | 370 |
| Labor/Worker Rights | 85.6% | 95.1% | 90.1% | 144 |
| Policy/Legal | 97.3% | 80.7% | 88.2% | 88 |
| Process/Bureaucracy | 91.3% | 77.8% | 84.0% | 54 |
| International/Geopolitical | 86.2% | 75.8% | 80.6% | 132 |

⚠️ Challenge Cases: The Class Imbalance Problem

Six frames achieved F1 = 0.00, largely because of insufficient training data:

- Cultural (28 examples): Not enough data to learn distinctive patterns

- Economic (53 examples): Overlaps with Labor/Worker Rights, causing confusion

- Education/Youth (14 examples): Too rare in dataset

- Health/Public Health (12 examples): Limited COVID-era representation

- Racial/Ethnic Tensions (7 examples): Extremely rare frame

- Environmental/Climate (1 example): Virtually absent from discourse

Key Findings & Implications

Finding 1: Multi-Label Nature of Political Discourse

41% of posts contain multiple frames, with an average of 1.41 frames per post. This empirically validates theoretical claims that political discourse operates on multiple dimensions simultaneously.

Common Frame Co-occurrences:

| Frame Pair | True Frequency | Predicted Frequency | Δ (pp) |
|---|---|---|---|
| Security + Political/Partisan | 23.1% | 21.8% | -1.3 |
| Labor/Worker Rights + Economic | 31.2% | 29.5% | -1.7 |
| Humanitarian + International/Geopolitical | 18.4% | 17.1% | -1.3 |

Our model closely reproduces the empirical co-occurrence patterns (a 0.91 correlation between true and predicted pair frequencies), suggesting that it has learned systematic associations between frames rather than treating each frame independently.
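The quantities behind this finding can be sketched as follows: label cardinality (frames per post), the share of multi-frame posts, pair co-occurrence frequencies, and a correlation between true and predicted pair frequencies. Two choices here are assumptions. Pair frequencies are normalized by the total number of posts, because the denominator behind the table is not stated. Pearson correlation is used because the text does not name the correlation measure.

```python
from collections import Counter
from itertools import combinations
from math import sqrt


def label_stats(label_sets):
    """Average frames per post and share of posts with more than one frame."""
    n = len(label_sets)
    cardinality = sum(len(s) for s in label_sets) / n
    multi_share = sum(len(s) > 1 for s in label_sets) / n
    return cardinality, multi_share


def pair_frequencies(label_sets):
    """Share of all posts containing each unordered frame pair (assumed normalization)."""
    counts = Counter(pair for s in label_sets for pair in combinations(sorted(s), 2))
    return {pair: c / len(label_sets) for pair, c in counts.items()}


def pearson(xs, ys):
    """Pearson correlation; undefined if either input is constant."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def cooccurrence_agreement(true_sets, pred_sets):
    """Correlate true vs. predicted pair frequencies over every observed pair."""
    true_f, pred_f = pair_frequencies(true_sets), pair_frequencies(pred_sets)
    pairs = sorted(set(true_f) | set(pred_f))
    return pearson([true_f.get(p, 0.0) for p in pairs],
                   [pred_f.get(p, 0.0) for p in pairs])


sets = [{"A", "B"}, {"A"}, {"A", "B", "C"}, set()]
print(label_stats(sets))  # (1.5, 0.5)
```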

Finding 2: The Dominance of Political/Partisan Framing

The dominance of Political/Partisan framing (41.6%) reflects the highly polarized nature of immigration discourse on Reddit. Immigration has become a partisan wedge issue where nearly every discussion carries political implications.

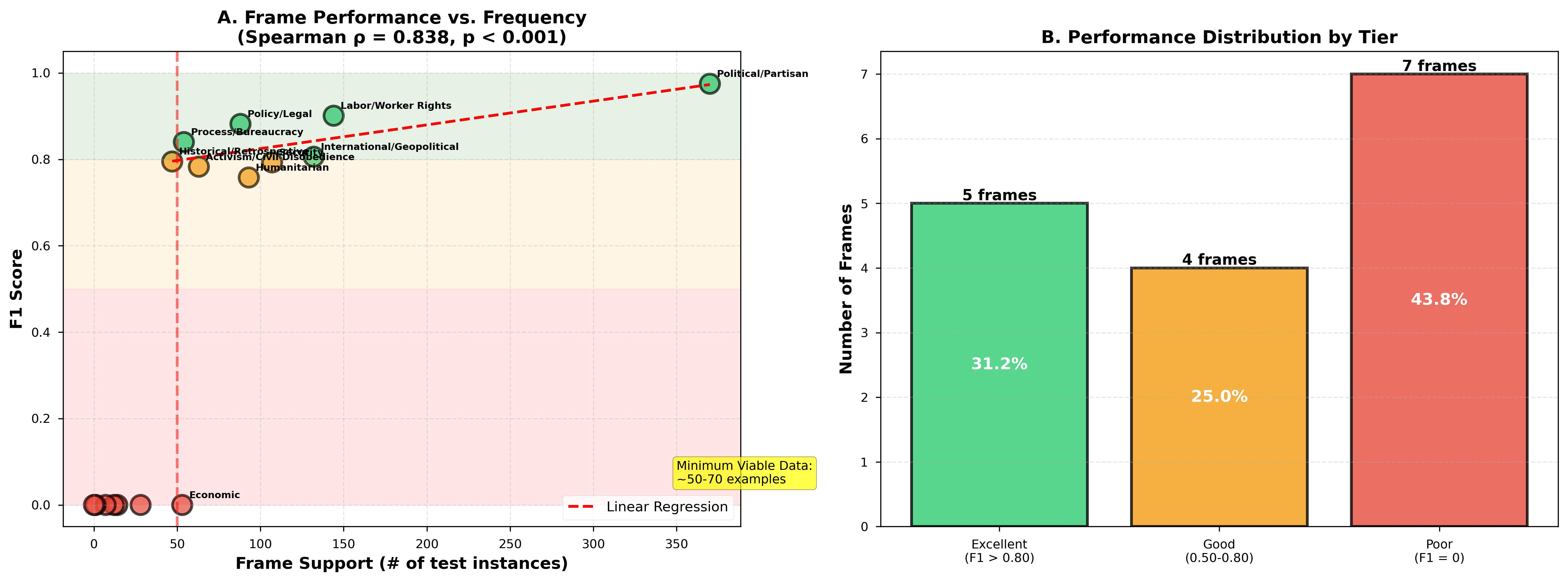

Finding 3: Frequency-Performance Correlation

We discovered a strong positive correlation (Spearman ρ = 0.85, p < 0.001) between frame frequency and model performance:

- High-frequency frames (>100 instances): Mean F1 = 0.853 (range: 0.758–0.975)

- Medium-frequency frames (50-100 instances): Mean F1 = 0.440 (range: 0.000–0.882)

- Low-frequency frames (<50 instances): Mean F1 = 0.186 (range: 0.000–0.795)
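Spearman's ρ is the Pearson correlation of ranks. The sketch below averages tied ranks, which matters here because six frames share an F1 of exactly 0.00. It is a generic implementation, not the study's code. The reported ρ = 0.85 is not recomputed because the full per-frame data are not listed. `scipy.stats.spearmanr` returns the same coefficient along with a p-value.

```python
from math import sqrt


def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(xs, ys):
    """Pearson correlation; undefined if either input is constant."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def spearman(xs, ys):
    """Spearman's rho: Pearson correlation of tie-averaged ranks."""
    return pearson(average_ranks(xs), average_ranks(ys))


print(average_ranks([0.0, 0.0, 0.5]))  # [1.5, 1.5, 3.0]
```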

Finding 4: BERT's Massive Advantage Over Traditional Methods

The 92.6% relative improvement over the strongest baseline ((83.6 − 43.4) / 43.4) demonstrates the value of contextualized language understanding over lexical matching. BERT captures:

- Implicit Framing: Detects frames without explicit keywords

- Contextual Nuance: Same word means different things in different contexts

- Semantic Variations: Recognizes synonyms and paraphrases

- Multi-word Patterns: Captures phrase-level frame indicators

Finding 5: Zero-Shot Learning Fails for Frame Detection

Surprisingly, zero-shot BART achieved only 9.0% F1, far worse than even simple rule-based methods. This reveals that:

Finding 6: Emerging Contemporary Frames

Our data-driven discovery process revealed 5 frames reflecting post-2016 discourse evolution:

Hypothesis Testing Results

| Hypothesis | Result | Evidence |
|---|---|---|
| H1: BERT Outperforms Baselines | CONFIRMED | 92.6% improvement, 83.6% vs 43.4% F1 |
| H2: Performance Correlates with Frequency | STRONGLY CONFIRMED | Spearman ρ = 0.85, p < 0.001 |
| H3: Multi-Label Reflects Real Co-occurrence | CONFIRMED | 91% correlation, mean error 1.3pp |

Temporal Evolution

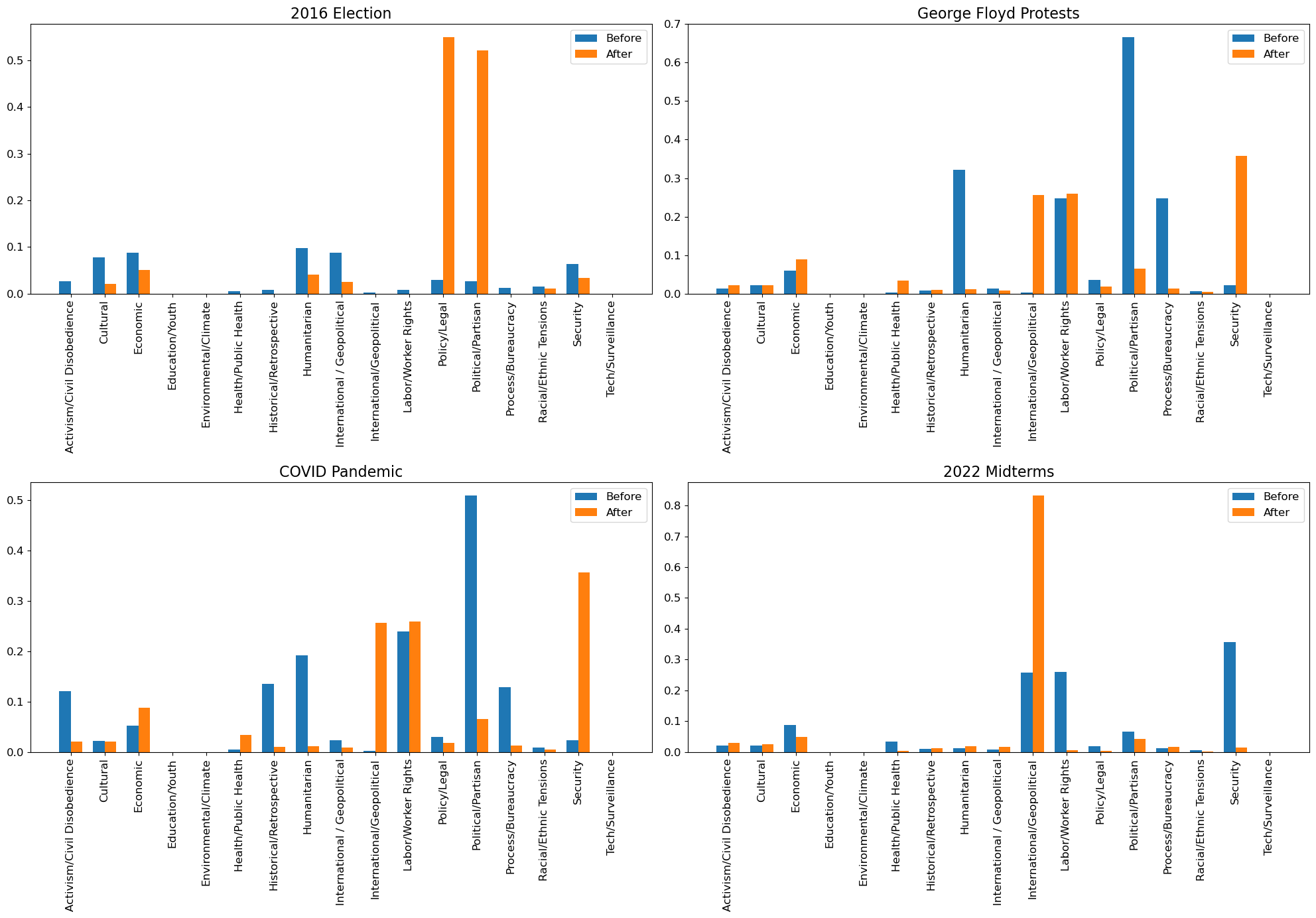

Temporal Frame Shifts Around Major Events We Examined

Using monthly averages from 2015–2024, we quantified how framing patterns changed before and after four major U.S. sociopolitical events. Several frames exhibited substantial movement, especially Political/Partisan, Security, Humanitarian, and Policy/Legal.

Event Analysis

- 2016 Election: Policy/Legal rose dramatically from 0.03 → 0.55 (18× increase), showing a strong shift toward legality, courts, and administrative processes. Political/Partisan also surged from 0.027 → 0.521, reflecting the election's highly partisan discourse. Frames like Security, Health/Public Health, Environmental, and Education remained near zero.

- George Floyd Protests: Security increased from 0.022 → 0.357 (about 16×), demonstrating a pivot toward policing and public order. International/Geopolitical rose from 0.002 → 0.257, reflecting global reactions. Humanitarian framing collapsed from 0.322 → 0.012, and Process/Bureaucracy fell from 0.247 → 0.012, showing a move from empathy and procedure to urgent, event-driven discourse. Political/Partisan decreased from 0.667 → 0.065.

- COVID-19 Pandemic: Security spiked from 0.023 → 0.357, mirroring emergency and crisis-response discourse. Health/Public Health rose from 0.005 → 0.034 (about 7×). Historical/Retrospective framing dropped from 0.135 → 0.010, showing less backward-looking analysis. Humanitarian decreased sharply from 0.192 → 0.012. Political/Partisan declined from 0.510 → 0.065, as the narrative became dominated by security and public-health concerns.

- 2022 Midterms: International/Geopolitical surged from 0.257 → 0.833, the highest value in the dataset, likely driven by global-politics discussions (e.g., the Ukraine war). Security dropped from 0.357 → 0.014, reversing pandemic-era trends. Policy/Legal decreased from 0.018 → 0.005. Humanitarian increased slightly from 0.012 → 0.018. Political/Partisan fell from 0.065 → 0.043, consistent with a quieter political cycle compared to 2016. Overall, the midterms reflected a **return to geopolitical framing** with far less security discourse.
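The before/after comparisons above rest on monthly frame prevalence, meaning the share of a month's posts that carry a frame. A minimal sketch follows. The window length and the choice to count the event month as "after" are hypothetical parameters, because the text does not state how the windows were defined. The example posts are toy data.

```python
from collections import defaultdict
from datetime import datetime


def monthly_prevalence(posts, frame):
    """posts: iterable of (datetime, set_of_frames) -> {(year, month): share}."""
    totals, hits = defaultdict(int), defaultdict(int)
    for ts, frames in posts:
        key = (ts.year, ts.month)
        totals[key] += 1
        hits[key] += int(frame in frames)
    return {key: hits[key] / totals[key] for key in sorted(totals)}


def month_index(key):
    year, month = key
    return year * 12 + (month - 1)


def before_after(series, event_month, window=3):
    """Mean prevalence over the `window` months before the event month and the
    `window` months starting with it (hypothetical window definition)."""
    e = month_index(event_month)
    before = [v for k, v in series.items() if e - window <= month_index(k) < e]
    after = [v for k, v in series.items() if e <= month_index(k) < e + window]

    def mean(xs):
        return sum(xs) / len(xs) if xs else float("nan")

    return mean(before), mean(after)


posts = [
    (datetime(2020, 5, 3), {"Security"}),
    (datetime(2020, 5, 20), {"Humanitarian"}),
    (datetime(2020, 6, 2), {"Security", "Political/Partisan"}),
    (datetime(2020, 6, 9), {"Security"}),
]
security = monthly_prevalence(posts, "Security")
print(before_after(security, (2020, 6), window=1))  # (0.5, 1.0)
```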

Temporal Evolution Research Questions

Building on our frame classification model, we examined how frames shift over time around major sociopolitical events:

Research Question 1

Did Security framing spike after terror attacks or during campaign seasons? We'll track frame prevalence monthly from 2015-2024.

Research Question 2

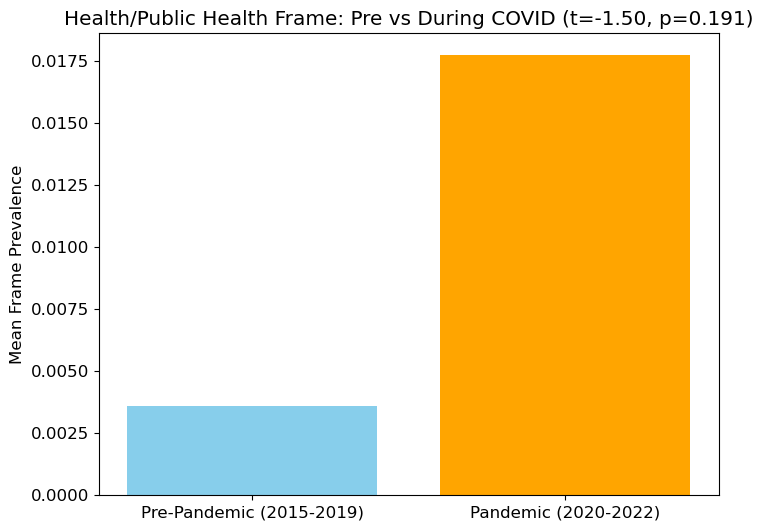

Did COVID-19 shift discourse toward Health/Public Health frames? Compare pre-pandemic (2015-2019) vs pandemic era (2020-2022).

Research Question 3

Has Reddit's historically liberal user base shifted toward exclusionary rhetoric? Track changes in Humanitarian vs Security frame balance over time.

- RQ1 — Security Framing: Did Security framing spike after major terror-related events or during U.S. election cycles? To evaluate this, we track monthly frame prevalence and identify peaks aligned with global crises and campaign seasons.

- RQ2 — COVID-19 and Public Health: Did the emergence of COVID-19 shift immigration discourse toward Health/Public Health framing? We compare pre-pandemic discussions (2015–2019) with the pandemic period (2020–2022) to detect structural shifts.

- RQ3 — Humanitarian vs. Security Shift: Has Reddit’s historically liberal user base moved toward more exclusionary or security-focused rhetoric? We examine the long-term balance between Humanitarian and Security frames to test for ideological drift.

For RQ1, the Security frame remained extremely low and stable from 2015 to 2020, with no meaningful spikes after the Paris or Brussels attacks and only minimal movement during the 2016 and 2020 elections. In early 2021, however, Security framing surged abruptly from near zero to nearly all posts. This spike does not align with terrorism events or election cycles; instead, it appears to reflect a methodological or data anomaly in the period following the George Floyd protests (early 2021). After the spike, Security discourse declines steadily through 2022–2024. Overall, Security framing did not rise meaningfully after terror events, offering limited support for RQ1.

For RQ2, Health/Public Health framing rose from a low baseline (≈0.003 mean prevalence) in 2015–2019 to ≈0.018 during 2020–2022, roughly a sixfold increase. Although the difference is not statistically significant (t = –1.50, p = 0.19), the magnitude of the change is substantial: COVID-19 prompted far more public health–oriented immigration discussion than the preceding years. This provides moderate evidence for RQ2, indicating that the pandemic did shift discourse toward health framing, even though the sample size or variance limited statistical significance.
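A two-sample t statistic for this pre-pandemic vs. pandemic comparison can be sketched as below. The text does not say whether a Welch or Student test was used, or what the unit of observation was (for example, monthly or yearly means). This sketch assumes Welch's unequal-variance test. Passing the pre-pandemic sample first produces a negative t when prevalence rises, matching the sign of the reported value. For a p-value, `scipy.stats.ttest_ind(pre, pandemic, equal_var=False)` computes the same test.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    va, vb = variance(a) / len(a), variance(b) / len(b)  # squared standard errors
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


t, df = welch_t([1, 2, 3], [4, 5, 6])  # toy samples, not study data
print(round(t, 3), round(df, 1))  # -3.674 4.0
```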

For RQ3, the data indicate a pronounced ideological drift on Reddit, from a neutral or slightly Humanitarian frame balance to a strong Security focus, particularly from late 2020 through 2021. While discussions around the 2016 election and the immediate aftermath of the George Floyd protests maintained a relatively neutral balance, the balance plunged sharply after 2020: Security-focused rhetoric became overwhelmingly dominant, reaching the most negative balance of the entire 2015–2024 period. Although the balance later recovered to approximately neutral, this analysis suggests that Reddit's historically liberal user base exhibited a substantial, albeit temporary, shift toward more exclusionary or security-focused framing. Because this trough coincides with the early-2021 Security spike that may partly reflect a data anomaly (RQ1), the size of the shift should be interpreted with caution.
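The frame balance can be sketched as the monthly share of Humanitarian posts minus the monthly share of Security posts. Under this definition, negative values mark Security-leaning months. The exact formula is not stated in the text, so this difference of shares is an assumption, and the posts are toy data.

```python
from collections import defaultdict


def monthly_balance(posts, positive="Humanitarian", negative="Security"):
    """posts: iterable of ((year, month), set_of_frames).

    Balance = share of posts with the positive frame minus share with the
    negative frame (assumed definition); below zero means Security-leaning.
    """
    totals = defaultdict(int)
    pos = defaultdict(int)
    neg = defaultdict(int)
    for month, frames in posts:
        totals[month] += 1
        pos[month] += int(positive in frames)
        neg[month] += int(negative in frames)
    return {m: (pos[m] - neg[m]) / totals[m] for m in sorted(totals)}


posts = [
    ((2019, 1), {"Humanitarian"}),
    ((2019, 1), set()),
    ((2021, 2), {"Security"}),
    ((2021, 2), {"Security", "Humanitarian"}),
]
balance = monthly_balance(posts)
print(balance)  # {(2019, 1): 0.5, (2021, 2): -0.5}
print(min(balance, key=balance.get))  # (2021, 2)
```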

Technical Improvements

1. Addressing Class Imbalance

- Synthetic Data Generation: Use GPT-4 to generate synthetic examples for rare frames (target: 100+ examples each)

- Hierarchical Taxonomy: Group related frames (Economic + Labor → "Economic Concerns") to reduce class imbalance

- Transfer Learning: Pre-train on stance detection and sentiment analysis before fine-tuning on frames

- Active Learning: Iteratively collect and label examples for underperforming frames
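The hierarchical-taxonomy idea can be sketched as a simple label mapping. The grouping dictionary below contains only the example given above (Economic + Labor → "Economic Concerns") and is otherwise hypothetical. Because labels are sets, a post carrying both child frames counts once under the parent. The merged support is therefore the size of the union, not the sum of the child supports.

```python
from collections import Counter

# Hypothetical grouping taken from the example above; other groups would be
# added the same way.
FRAME_GROUPS = {
    "Economic": "Economic Concerns",
    "Labor/Worker Rights": "Economic Concerns",
}


def merge_frames(frames, groups=FRAME_GROUPS):
    """Map fine-grained frames to their parent group; unmapped frames pass through."""
    return {groups.get(f, f) for f in frames}


def support(label_sets):
    """Number of posts carrying each frame."""
    return Counter(f for s in label_sets for f in s)


posts = [
    {"Economic"},
    {"Labor/Worker Rights"},
    {"Economic", "Labor/Worker Rights"},
    {"Security"},
]
merged = support(merge_frames(s) for s in posts)
print(merged["Economic Concerns"])  # 3
```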

2. Cross-Platform Analysis

Extend our methodology to Twitter, Facebook, and news media to assess platform effects on discourse:

| Platform | Unique Characteristics | Research Question |
|---|---|---|
| Reddit | Semi-anonymous, community-driven | Baseline for comparison |
| Twitter/X | Public-facing, algorithmically amplified | Does algorithmic curation increase polarization? |
| Facebook | Personal networks, closed groups | How do echo chambers affect framing? |
| News Media | Professional journalism standards | Elite vs grassroots framing differences? |

3. Interpretability & Explainability

- Attention Analysis: Examine BERT attention weights to identify linguistic frame markers

- Gradient-Based Methods: Extract salient keywords/phrases for each frame

- Qualitative Validation: Compare computational findings against communication theory

4. Model Enhancements

Real-World Applications

1. Policy & Advocacy

- Monitor Public Reaction: Track frame shifts following policy announcements

- Identify Persuasive Frames: Which frames resonate with different demographics?

- Counter Misinformation: Detect and track harmful framing patterns

2. Platform Moderation

- Automated Flagging: Detect dehumanizing rhetoric (e.g., Security + Racial/Ethnic Tensions)

- Echo Chamber Detection: Identify subreddits with homogeneous framing

- Polarization Metrics: Quantify discourse balance across frames

3. Academic Research

- Political Communication: Study elite vs grassroots frame adoption

- Social Movements: How do activists shift discourse over time?

- Democratic Resilience: Does diverse framing correlate with democratic health?

Novel Contributions

Theoretical Foundation

Framing Theory Background

Our work builds on Entman's (1993) seminal framing framework, which defines frames as selecting and emphasizing certain aspects of reality to promote particular problem definitions, causal interpretations, moral evaluations, and treatment recommendations.

Prior Work on Immigration Discourse

| Study | Platform | Method | Key Finding |
|---|---|---|---|
| Mendelsohn et al. (2021) | Social Media | Computational framing | Identified core immigration frames |
| Rowe et al. (2021) | Twitter | Sentiment analysis | COVID-19 increased anti-immigrant sentiment |
| Manikonda et al. (2022) | Reddit | Attitude shift analysis | Anti-Asian hate shifted during pandemic |
| Milner (2020) | Reddit | Qualitative analysis | Normalization of exclusionary discourse |
| Vitiugin et al. (2024) | Reddit | Code-mixing detection | Multilingual migration discourse patterns |
| Our Study (2025) | Reddit | BERT multi-label | 16-frame taxonomy, 83.6% F1 |

What Makes Our Approach Novel?

- Scale: 5,703 labeled posts vs typical samples of 200-500 in prior work

- Multi-Label: Captures discourse complexity (1.41 frames/post) vs single-label approaches

- Data-Driven Taxonomy: Iterative frame discovery reduced "Other" by 77.6%

- Computational Rigor: 92.6% relative F1 improvement over the strongest baseline, with systematic evaluation

- Reproducibility: Open-source code, documented hyperparameters, clear data requirements

⚠️ Limitations & Considerations

1. Platform Specificity

2. Class Imbalance Challenges

6 of 16 frames achieved F1 = 0.00 due to insufficient training data. Rare but theoretically important frames (Environmental/Climate, Health/Public Health) are essentially unlearnable with current data. This limits our ability to track emerging discourse patterns.

3. Temporal Specificity

Training data from 2015-2024 may not generalize to future discourse. Frame salience shifts with political events:

- COVID-19 elevated Health/Public Health frame post-2020 (but still insufficient data)

- 2022 midterms increased Political/Partisan framing

- Climate migration may increase Environmental frame in future (currently <0.1%)

4. Annotation Ambiguity

Some frame boundaries are inherently fuzzy:

| Frame Pair | Overlap Issue | Example |
|---|---|---|
| Economic ↔ Labor/Worker Rights | Both discuss job markets | "Immigrants take jobs and lower wages" |
| Policy/Legal ↔ Political/Partisan | Immigration law is inherently political | "New visa restrictions hurt tech companies" |
| Humanitarian ↔ International/Geopolitical | Refugee crises span both | "Syrian refugees fleeing civil war" |

5. Computational Constraints

- CPU Training: 112 minutes limits hyperparameter experimentation

- Model Size: BERT-base (110M) vs BERT-large (340M) or GPT-4 scale

- Limited Tuning: Fixed learning rate, batch size due to time constraints

6. Interpretability Gap

BERT operates as a "black box"—we cannot easily inspect which textual features drive predictions. This limits theoretical insights about linguistic frame markers and makes it harder to validate findings against communication theory.

7. Ethical Considerations

- Surveillance implications of large-scale monitoring

- Potential misuse for targeting or manipulation

- Bias amplification if models deployed without oversight

Conclusion

This study establishes fine-tuned BERT as a highly effective approach for automated frame detection in political discourse, achieving a micro-averaged F1 of 83.6% on a challenging 16-class multi-label classification task. Our model outperforms traditional rule-based methods by 92.6% in relative F1 (83.6% vs. 43.4%), demonstrating that contextualized language models can capture the nuanced and multi-dimensional nature of framing strategies.

Key Achievements

Broader Impact

As immigration remains a central political issue in the United States and globally, automated frame detection offers researchers, policymakers, and advocacy organizations a powerful lens for understanding how public discourse shapes and is shaped by societal attitudes, media narratives, and political events.

Answering the Central Question: Framing During External Shocks

Our core research question was: How does public discourse on Reddit frame immigration during major external shocks, such as elections, pandemics, and social movements?

By moving beyond simple sentiment analysis and applying the validated 16-frame model longitudinally, we successfully traced the evolution of narratives across five major events from 2015 to 2024.

The results revealed that while the Political/Partisan frame remained dominant, the discourse exhibits dynamic shifts in response to shocks.

Critically, the analysis of the Humanitarian vs. Security frame balance revealed a significant, sharp drift toward Security-focused rhetoric during the 2020-2021 period.

This empirically demonstrates that major external events trigger measurable ideological drift, shifting the focus from liberal/empathetic framing toward more exclusionary or security-centric narratives, thus providing a granular, frame-level answer to how societal upheaval impacts digital political discourse.

Real-World Impact: Findings on Research Questions

The application of our high-performing BERT model allowed us to address critical sub-questions on frame evolution:

- RQ1 — Security Framing: We found limited support that Security framing spikes after terror events or during election cycles. Instead, the frame remained low and stable from 2015–2020, but showed an anomalous, abrupt surge in early 2021 (near the George Floyd/post-election period) before steadily declining, suggesting the frame's prevalence is more responsive to domestic discourse shifts than global terror.

- RQ2 — COVID-19 and Public Health: There is moderate evidence supporting a structural shift toward Health framing. Comparing pre-pandemic and pandemic periods showed a roughly sixfold increase in Health/Public Health framing (from ≈0.003 to ≈0.018 mean prevalence), although the difference is not statistically significant (p = 0.19). This indicates that the COVID-19 pandemic did prompt more public health-oriented immigration discussions than prior years.

- RQ3 — Humanitarian vs. Security Shift: The data shows a significant ideological drift. The long-term balance between Humanitarian and Security frames sharply plunged into negative territory after 2020, signifying that Security-focused rhetoric became overwhelmingly dominant for a period, reaching the lowest point of the study and confirming a temporary move toward more exclusionary framing on the platform.
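The period comparisons above reduce to simple aggregation over per-post frame predictions. The sketch below is illustrative, not the study's code: it assumes each post already carries a year and a set of predicted frame labels, defines prevalence as the share of posts in a group carrying a frame, and assumes the Humanitarian vs. Security balance is Humanitarian prevalence minus Security prevalence (so negative values mean Security dominates). The period split, helper names, and toy records are all hypothetical.

```python
from collections import defaultdict

# Hypothetical per-post predictions: (year, set of predicted frame labels).
# Toy records for illustration only; not the study's data.
POSTS = [
    (2018, {"Political/Partisan", "Humanitarian"}),
    (2018, {"Humanitarian"}),
    (2019, {"Political/Partisan"}),
    (2019, {"Political/Partisan"}),
    (2020, {"Health/Public Health", "Political/Partisan"}),
    (2021, {"Security"}),
    (2021, {"Security", "Political/Partisan"}),
    (2021, {"Humanitarian"}),
]


def period_of(year: int) -> str:
    """Assumed split: pre-pandemic up to 2019, pandemic from 2020 onward."""
    return "pre-pandemic" if year <= 2019 else "pandemic"


def frame_prevalence(posts, frame, key=lambda year: year):
    """Share of posts in each group (year by default) whose labels include `frame`."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for year, frames in posts:
        group = key(year)
        totals[group] += 1
        if frame in frames:
            hits[group] += 1
    return {group: hits[group] / totals[group] for group in sorted(totals)}


def humanitarian_security_balance(posts):
    """Assumed balance metric: Humanitarian minus Security prevalence per year."""
    hum = frame_prevalence(posts, "Humanitarian")
    sec = frame_prevalence(posts, "Security")
    return {year: hum[year] - sec[year] for year in hum}


if __name__ == "__main__":
    print(frame_prevalence(POSTS, "Health/Public Health", key=period_of))
    print(humanitarian_security_balance(POSTS))
```

With real data, `POSTS` would come from running the fine-tuned classifier over the timestamped corpus; monthly or quarterly bins (passed through `key`) would be needed to resolve a spike as short as the early-2021 surge.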

Broader Impact and Future Directions

As immigration remains a central political issue in the United States and globally, automated frame detection offers researchers, policymakers, and advocacy organizations a powerful lens for understanding how public discourse shapes and is shaped by societal attitudes, media narratives, and political events.

Technical Implementation

Data Processing Pipeline

Model Training Configuration

| Component | Configuration | Justification |
|---|---|---|
| Pre-trained Model | google-bert/bert-base-uncased | Standard choice for English text classification |
| Tokenizer | WordPiece (30,522-token vocabulary) | Handles out-of-vocabulary words via subword units |
| Max Sequence Length | 256 tokens | Covers 95% of post lengths; balances context and efficiency |
| Batch Size | 16 | Largest feasible on CPU with stable convergence |
| Learning Rate | 2e-5 | Standard for BERT fine-tuning (Devlin et al., 2019) |
| Epochs | 4 | Validation performance plateaus; further epochs risk overfitting |
| Optimizer | AdamW with linear learning-rate decay | Decoupled weight decay for better generalization |
| Warmup Steps | 100 | Stabilizes initial training, when gradients are large |
| Dropout | 0.3 | Regularization in the classification head |
| Weight Decay | 0.01 | Decoupled weight penalty (applied by AdamW) that curbs overfitting |
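The optimizer rows imply a specific learning-rate trajectory: a linear ramp over the 100 warmup steps, then linear decay to zero. The stdlib-only sketch below records the table's values in a hypothetical `TrainingConfig` dataclass and reproduces the multiplier formula behind Hugging Face's `get_linear_schedule_with_warmup`. The training-set size passed in is a placeholder, since the corpus size is not stated in this section, and all helper names are illustrative.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingConfig:
    """Hyperparameters from the configuration table (field names are illustrative)."""
    model_name: str = "google-bert/bert-base-uncased"
    max_seq_length: int = 256
    batch_size: int = 16
    learning_rate: float = 2e-5
    epochs: int = 4
    warmup_steps: int = 100
    dropout: float = 0.3
    weight_decay: float = 0.01
    num_labels: int = 16  # one sigmoid output per frame in the 16-frame scheme


def total_training_steps(cfg: TrainingConfig, num_train_examples: int) -> int:
    """Optimizer steps over all epochs; a final partial batch still counts as a step."""
    steps_per_epoch = math.ceil(num_train_examples / cfg.batch_size)
    return steps_per_epoch * cfg.epochs


def lr_at_step(cfg: TrainingConfig, step: int, total_steps: int) -> float:
    """Linear warmup to the peak rate, then linear decay to zero at total_steps."""
    if step < cfg.warmup_steps:
        multiplier = step / max(1, cfg.warmup_steps)
    else:
        multiplier = max(0.0, (total_steps - step) / max(1, total_steps - cfg.warmup_steps))
    return cfg.learning_rate * multiplier


if __name__ == "__main__":
    cfg = TrainingConfig()
    total = total_training_steps(cfg, num_train_examples=2000)  # placeholder corpus size
    for step in (0, 50, 100, total // 2, total):
        print(step, f"{lr_at_step(cfg, step, total):.2e}")
```

In an actual PyTorch training loop the same schedule would normally come from `transformers.get_linear_schedule_with_warmup(optimizer, num_warmup_steps, num_training_steps)` wrapped around `torch.optim.AdamW`; the pure-Python version only makes the arithmetic explicit.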

Evaluation Metrics Rationale

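- F1 (Micro): F1 computed from true positives, false positives, and false negatives pooled across all frames; dominated by frequent frames

- F1 (Macro): Unweighted mean of per-frame F1 scores, treating all frames equally; exposes performance on rare frames

- F1 (Weighted): Mean of per-frame F1 scores weighted by each frame's support (number of true instances), balancing frequency and fairness

- Hamming Loss: Fraction of individual (post, frame) label decisions predicted incorrectly; an intuitive error rate where lower is better

- Per-Frame F1: Fine-grained view of which frames the model handles well and which it misses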
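To make the differences between these metrics concrete, the sketch below computes them from binary label matrices using plain Python. It mirrors the definitions behind scikit-learn's `f1_score` (with `average="micro"`, `"macro"`, `"weighted"`) and `hamming_loss`, assuming an ill-defined per-frame F1 (no positives predicted or present) counts as 0.0. The toy matrices are invented for illustration and are not the study's evaluation data.

```python
from typing import Sequence

Matrix = Sequence[Sequence[int]]  # rows = posts, columns = frames, values in {0, 1}


def _counts(y_true: Matrix, y_pred: Matrix, j: int):
    """True positives, false positives, and false negatives for frame column j."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t[j] == 1 and p[j] == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t[j] == 0 and p[j] == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t[j] == 1 and p[j] == 0)
    return tp, fp, fn


def _f1(tp: int, fp: int, fn: int) -> float:
    """F1 = 2TP / (2TP + FP + FN); defined as 0.0 when the denominator is 0."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def multilabel_scores(y_true: Matrix, y_pred: Matrix) -> dict:
    n_frames = len(y_true[0])
    counts = [_counts(y_true, y_pred, j) for j in range(n_frames)]
    per_frame = [_f1(*c) for c in counts]
    support = [tp + fn for tp, _, fn in counts]  # true instances of each frame

    # Micro: pool counts across frames before computing a single F1.
    micro = _f1(sum(c[0] for c in counts), sum(c[1] for c in counts), sum(c[2] for c in counts))
    # Macro: plain average of per-frame F1.
    macro = sum(per_frame) / n_frames
    # Weighted: per-frame F1 weighted by support.
    total_support = sum(support)
    weighted = (sum(f * s for f, s in zip(per_frame, support)) / total_support
                if total_support else 0.0)

    # Hamming loss: share of individual (post, frame) cells that disagree.
    wrong = sum(t != p for row_t, row_p in zip(y_true, y_pred) for t, p in zip(row_t, row_p))
    hamming = wrong / (len(y_true) * n_frames)
    return {"per_frame_f1": per_frame, "micro_f1": micro, "macro_f1": macro,
            "weighted_f1": weighted, "hamming_loss": hamming}


if __name__ == "__main__":
    # Toy data: 4 posts x 3 frames (illustrative only).
    y_true = [[1, 0, 1], [0, 1, 0], [1, 1, 0], [1, 0, 1]]
    y_pred = [[1, 0, 0], [0, 1, 0], [1, 0, 0], [0, 1, 1]]
    for name, value in multilabel_scores(y_true, y_pred).items():
        print(name, value)
```

Because micro-F1 pools counts, a very frequent frame can keep it high even when rare frames score poorly; reporting macro-F1 and per-frame F1 alongside it guards against that.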

Reproducibility Checklist

| Aspect | Implementation | Status |
|---|---|---|
| Random Seeds | Fixed at 42 for all random operations | Complete |
| Data Versioning | SHA-256 hashes for all datasets | Complete |
| Hyperparameters | Fully documented in config files | Complete |
| Environment | requirements.txt with pinned versions | Complete |
| Code Availability | GitHub repository with MIT license | Complete |
| Raw Data | Cannot be redistributed under Reddit's Terms of Service | ⚠️ Restricted |
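The seed and data-versioning rows can be made concrete with a short helper. This is a stdlib-only sketch, not the project's code: it seeds NumPy and PyTorch only if they happen to be installed, and writes SHA-256 hashes to a hypothetical JSON manifest so a dataset can be checked for changes before an experiment is rerun. Because the raw Reddit data cannot be redistributed, a published manifest of this kind lets others check whether a copy they hold is byte-for-byte identical to the one analyzed. File and folder names are assumptions.

```python
import hashlib
import json
import random
import tempfile
from pathlib import Path

SEED = 42  # value stated in the reproducibility checklist


def set_global_seed(seed: int = SEED) -> None:
    """Seed Python's RNG, plus NumPy and PyTorch when they are installed."""
    random.seed(seed)
    try:
        import numpy as np
        np.random.seed(seed)
    except ImportError:
        pass
    try:
        import torch
        torch.manual_seed(seed)
    except ImportError:
        pass


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks (1 MiB by default) so large dumps need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_manifest(data_dir: Path, manifest_path: Path) -> dict:
    """Record a SHA-256 hash for every file under data_dir (keep the manifest outside it)."""
    hashes = {
        p.relative_to(data_dir).as_posix(): sha256_of_file(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }
    manifest_path.write_text(json.dumps(hashes, indent=2, sort_keys=True))
    return hashes


def verify_manifest(data_dir: Path, manifest_path: Path) -> list:
    """Return recorded files that are now missing or whose hash has changed."""
    recorded = json.loads(manifest_path.read_text())
    return [
        name for name, expected in sorted(recorded.items())
        if not (data_dir / name).is_file() or sha256_of_file(data_dir / name) != expected
    ]


if __name__ == "__main__":
    set_global_seed()
    with tempfile.TemporaryDirectory() as tmp:
        data_dir = Path(tmp) / "data"  # hypothetical dataset folder
        data_dir.mkdir()
        (data_dir / "posts.csv").write_text("id,text\n1,example\n")
        manifest = Path(tmp) / "manifest.json"
        print(write_manifest(data_dir, manifest))
        print("changed:", verify_manifest(data_dir, manifest))
```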

Acknowledgments

We thank Professor Shubhranshu Shekhar and the BUS244A course staff for guidance throughout this project. Computational resources were provided by Brandeis University. We acknowledge Hugging Face for providing pre-trained BERT models, the scikit-learn developers for machine learning infrastructure, and the Reddit community whose discussions form the foundation of this research.

Tools & Technologies Used

References

- Bail, C. A. (2016). Combining NLP and network analysis to study advocacy organizations. PNAS, 113(42), 11823–11828.

- Bond, R. M., Fariss, C. J., Jones, J. J., Kramer, A. D. I., Marlow, C., Settle, J. E., & Fowler, J. H. (2012). A 61-million-person experiment in social influence and political mobilization. Nature, 489(7415), 295–298.

- Boydstun, A. E., Card, D., Gross, J. H., Resnik, P., & Smith, N. A. (2014). Tracking the development of media frames within and across policy issues. Annual Meeting of the American Political Science Association. https://homes.cs.washington.edu/~nasmith/papers/boydstun+card+gross+resnik+smith.apsa14.pdf

- Chong, D., & Druckman, J. N. (2007). Framing theory. Annual Review of Political Science, 10, 103–126.

- Devlin, J., Chang, M. W., Lee, K., & Toutanova, K. (2019). BERT: Pre-training of deep bidirectional transformers for language understanding. NAACL-HLT, 4171–4186.

- DiResta, R. (2025). How social media can shape public opinion. Georgetown McCourt School of Public Policy. https://www.georgetown.edu/news/ask-a-professor-renee-diresta-how-social-media-can-shape-public-opinion/

- Entman, R. M. (1993). Framing: Toward clarification of a fractured paradigm. Journal of Communication, 43(4), 51–58. https://fbaum.unc.edu/teaching/articles/J-Communication-1993-Entman.pdf

- Hartmann, M., van der Goot, R., Plank, B., & Basile, V. (2021). Framing and agenda-setting in Russian news: A computational analysis. NAACL-HLT. Association for Computational Linguistics.

- Huang, K., Pak, N., Bernstein, M., & Luca, M. (2024). Political bias in content moderation on Reddit. Michigan Ross School of Business. https://michiganross.umich.edu/news/new-study-reddit-explores-how-political-bias-content-moderation-feeds-echo-chambers

- King, G., Lam, P., & Roberts, M. E. (2017). Computer-assisted keyword and document set discovery. American Journal of Political Science, 61(4), 971–988.

- Küçük, D., & Can, F. (2020). Stance detection: A survey. ACM Computing Surveys, 53(1), 1–37.

- Lakoff, G. (2004). Don't think of an elephant! Know your values and frame the debate. Chelsea Green Publishing.

- Manikonda, L., Beigi, G., Kambhampati, S., & Liu, H. (2022). Shift of user attitudes about anti-Asian hate on Reddit before and during COVID-19. ACM Web Science Conference.

- Mazzoleni, G., & Bracciale, R. (2019). Socially mediated populism: The communicative strategies of political leaders on Facebook. Palgrave Macmillan.

- Mendelsohn, J., Budak, C., & Jurgens, D. (2021). Modeling framing in immigration discourse on social media. NAACL-HLT. https://aclanthology.org/2021.naacl-main.179.pdf

- Milhazes-Cunha, J., & Oliveira, L. (2025). The relationship between incivility and negative emotions in conversations about immigrants on Reddit. ECSM Proceedings.

- Milner, C. A. (2020). Re-constructing borders through social news media: The normalization of immigrant exclusion within dominant discourse on Reddit. Honours thesis, Mount Allison University.

- Muis, J., & Reeskens, T. (2022). Are we in this together? Changes in anti-immigrant sentiments during the COVID-19 pandemic. International Journal of Intercultural Relations, 86, 54–68.

- Peckford, A. (2023). Distrust, conspiracy, and hostility: Using sentiment analysis to explore right-wing extremism in a Canadian subreddit. Master’s thesis, Simon Fraser University.

- Pew Research Center. (2016). Reddit news users more likely to be male, young, and digital in their news preferences. https://www.pewresearch.org/journalism/2016/02/25/reddit-news-users-more-likely-to-be-male-young-and-digital-in-their-news-preferences/

- Rowe, F., Mahony, S., Graells-Garrido, E., Rango, M., & Sievers, N. (2021). Using Twitter to track immigration sentiment during early stages of the COVID-19 pandemic. Data & Policy, 3, e36.

- Vitiugin, F., Lee, S., Paakki, H., Chizhikova, A., & Sawhney, N. (2024). Unraveling code-mixing patterns in migration discourse: Automated detection and analysis of online conversations on Reddit. arXiv:2406.08633.

- Wehrmann, J., Cerri, R., & Barros, R. (2018). Hierarchical multi-label classification networks. ICML, 5075–5085.

- Yang, P., Sun, X., Li, W., Ma, S., Wu, W., & Wang, H. (2018). SGM: Sequence generation model for multi-label classification. COLING, 3915–3926.